Analysis: The Role of Explainability in Redact AI Prompting for Compliance

Picture this: your compliance officer has just discovered that employees have been pasting client portfolio data into ChatGPT for weeks, complete with Social Security numbers, account balances, and investment histories. The panic is real, and it should be. According to Harmonic Security's quarterly analysis, a significant portion of data shared with GenAI tools contains sensitive information. That kind of exposure can trigger GDPR violations and FINRA penalties, and IBM puts the average cost of a breach at a financial institution at $6.08 million. But here's the catch-22: banning AI tools outright means losing the 40% productivity gains AI is projected to deliver. The solution isn't to shut down innovation; it's to make AI explainable and accountable through transparent redaction systems that protect data before it ever reaches cloud-based models. When AI systems can show exactly what they're protecting and why, they turn from compliance nightmares into regulatory allies. This analysis explores why explainability in AI prompting is about more than ticking regulatory boxes: it builds trust, reduces risk, and future-proofs your organization's AI strategy while you keep pace with competitors already using these tools safely.

The Regulatory Landscape: Why Explainability Matters for AI Compliance

If your AI systems process personal data, explainability is not a nice-to-have; in many cases it is a legal requirement. Regulation has evolved quickly and now directly shapes how organizations may use AI for automated decision-making.

GDPR Article 22 establishes foundational protections, granting individuals the right not to be subject to decisions based solely on automated processing that produces legal effects or similarly significant impacts. This means when your AI system makes consequential decisions about people—whether approving loans, assessing creditworthiness, or filtering job applications—you must implement suitable measures to safeguard individual rights, including the ability to obtain human intervention and contest decisions.
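One way to operationalize the Article 22 safeguard is a routing gate that holds solely automated decisions with significant effects for human review. The Python sketch below is illustrative only: `Decision`, `route_decision`, and the routing labels are hypothetical names, and the sketch does not model Article 22's exceptions for contracts, legal authorization, or explicit consent.

```python
from dataclasses import dataclass, field

@dataclass
class Decision:
    subject_id: str
    outcome: str                 # e.g. "approve" or "decline"
    solely_automated: bool       # no meaningful human involvement so far
    significant_effect: bool     # legal or similarly significant effect, e.g. credit
    reasons: list[str] = field(default_factory=list)  # factors behind the outcome

def route_decision(decision: Decision) -> str:
    """Return the next step for a decision (hypothetical routing labels)."""
    if decision.solely_automated and decision.significant_effect:
        # Hold the outcome until a person has reviewed it; the stored reasons give
        # the reviewer, and the individual, something concrete to examine or contest.
        return "human_review"
    return "release"

loan = Decision("c-102", "decline", True, True, ["debt-to-income above policy limit"])
print(route_decision(loan))  # human_review
```

Keeping `reasons` next to each decision is what lets someone contest a specific outcome rather than an opaque score.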

The EU AI Act amplifies these requirements by categorizing AI systems by risk level and mandating technical documentation for high-risk applications before market deployment. According to regulatory compliance frameworks, organizations must now maintain conformity assessments, transparent documentation, and ongoing monitoring protocols—particularly crucial when AI systems use personal data.

For financial services, the stakes are even higher. Financial data tied to an identifiable person is personal data under GDPR, so, as AI regulatory compliance requirements for the sector stress, credit decisions, investment advice, and consumer-facing AI tools all fall under strict oversight. Banking supervisors are also moving toward explainability standards scaled to model riskiness, expecting institutions to apply specific explanation techniques when black-box models are used in critical business areas.

When implementing AI prompting for compliance tasks like document redaction, tools like Caviard.ai demonstrate good practice through local processing and transparent PII detection: they operate entirely in your browser, so no sensitive data leaves your machine, while automatically identifying over 100 types of personal information in real time.

Understanding Explainability in AI Prompting: What It Means in Practice

When you interact with AI redaction systems, you're essentially trusting a digital guardian with your sensitive information. But here's the question that should keep compliance officers awake at night: Can you actually explain what that AI just did with your data?

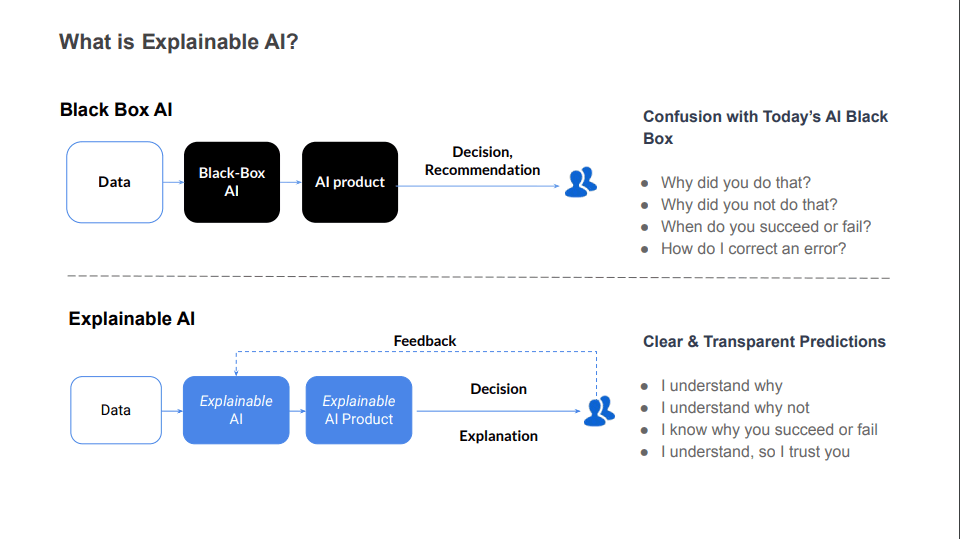

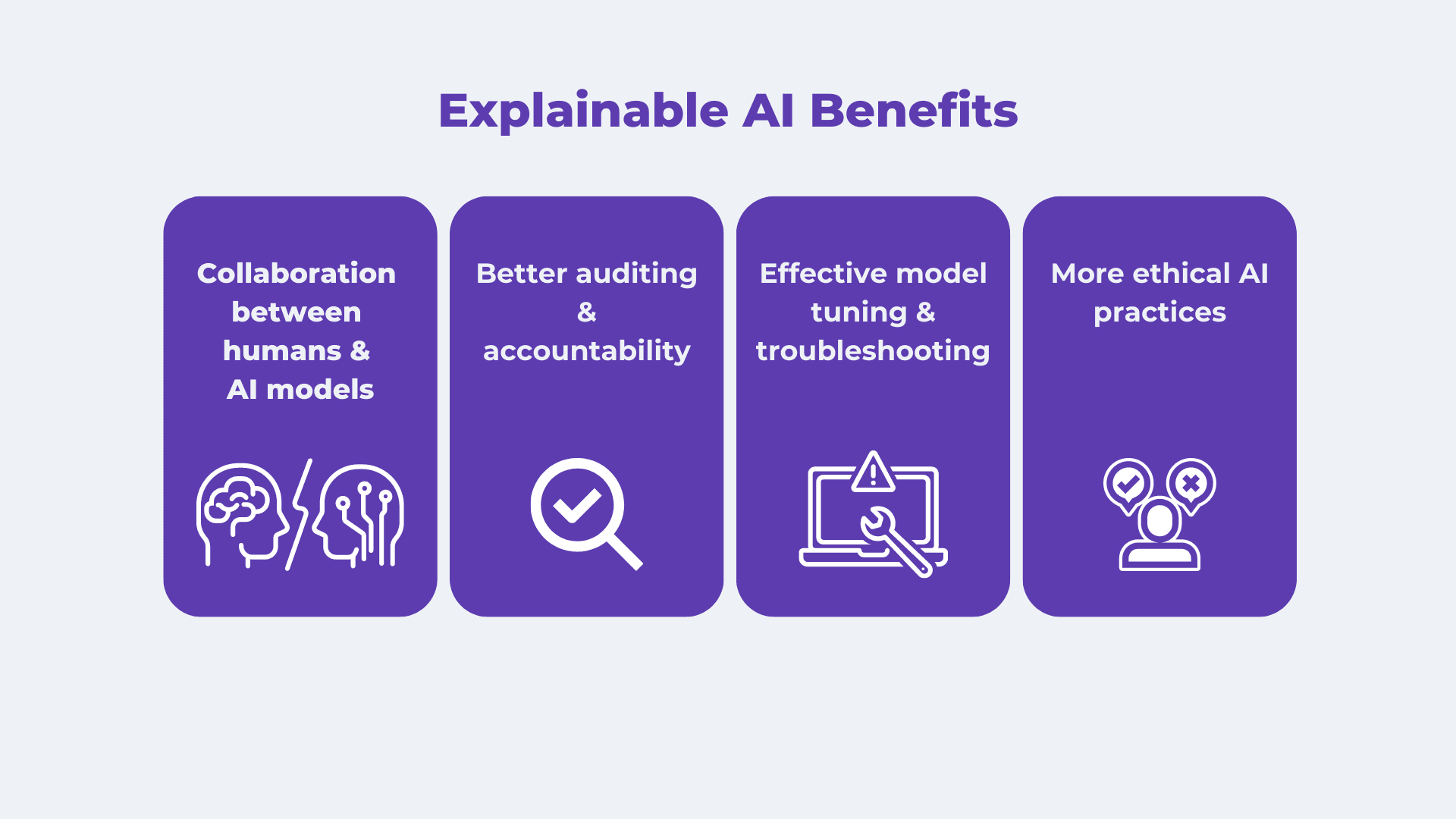

Explainable AI (XAI) represents the antidote to "black box" systems—those opaque algorithms where data goes in, decisions come out, and nobody quite knows what happened in between. In AI prompting and redaction, explainability means users can understand what data is being processed, how it's being protected, and why certain redactions occur.

Think of it this way: would you trust a security guard who refused to explain how they protect your building? The same logic applies to AI systems handling personal information. Under GDPR Articles 13 to 15, organizations must tell individuals when automated decision-making is in use and provide meaningful information about the logic involved.

Caviard.ai exemplifies this explainable approach. Rather than sending your data to opaque cloud servers, it processes everything locally in your browser, detecting over 100 types of PII in real time. A keyboard shortcut toggles between the original and redacted text, so you can see exactly what was masked. That visibility is more than a convenience: it supports the transparency and accountability GDPR expects from anyone processing personal data.

The four cornerstones of explainable AI include transparency (understanding the system's mechanisms), interpretability (comprehending specific decisions), justifiability (providing substantiated explanations), and robustness (maintaining consistency). When an AI redacts "James Smith" as "J___________," users should understand that pattern-based name detection triggered the action—not some inscrutable algorithm making arbitrary choices.
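To make justifiability concrete, each redaction can carry the rule that triggered it. This Python sketch assumes a single hypothetical rule, two adjacent capitalized words treated as a person's name; it is far cruder than real NLP-based name detection and is not how Caviard.ai or any particular product works.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Redaction:
    start: int      # character offset where the sensitive span begins
    end: int        # character offset just past the span
    entity: str     # kind of PII detected
    rule: str       # identifier of the rule that fired: the "why"

# Hypothetical rule for illustration: two adjacent capitalized words = a person name.
NAME_PATTERN = re.compile(r"\b[A-Z][a-z]+ [A-Z][a-z]+\b")

def explain_redactions(text: str) -> list[Redaction]:
    """Return every span the rule would redact, each with its justification."""
    return [
        Redaction(m.start(), m.end(), "PERSON", "two-capitalized-words")
        for m in NAME_PATTERN.finditer(text)
    ]

text = "Please call James Smith tomorrow."
for r in explain_redactions(text):
    print(f"{text[r.start:r.end]!r} redacted as {r.entity} by rule {r.rule!r}")
# 'James Smith' redacted as PERSON by rule 'two-capitalized-words'
```

A review screen or audit log can show these records next to the masked text, which turns "the tool removed something" into "the tool removed this span because this rule matched".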

Unlike opaque cloud services that may retain submitted data or use it for model training, explainable systems give you control and visibility, turning AI from a mysterious oracle into a trustworthy compliance partner.

The Hidden Risk: When AI Prompting Exposes Sensitive Financial Data

When a financial advisor casually asks ChatGPT to "summarize this client portfolio," they might not realize they've just triggered a compliance violation. According to Harmonic Security's quarterly analysis, a significant portion of data shared with GenAI tools contains sensitive information—including customer records, access credentials, and internal financial data. For financial institutions, this represents an existential threat.

The numbers tell a sobering story. IBM's Cost of a Data Breach Report puts the average breach at a financial institution at $6.08 million per incident, second only to healthcare. Yet 62% of employees in tech-driven organizations regularly use generative AI tools without management approval or an understanding of the data privacy implications.

The regulatory consequences are equally serious. FINRA's 2025 Annual Regulatory Oversight Report makes clear that existing obligations apply to AI: firms must review AI-generated communications for compliance, retain chat sessions, and ensure appropriate supervision. The challenge is that many AI systems work as black boxes, so firms struggle to explain how they reach their outputs, creating a perfect storm of regulatory risk.
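Retaining chat sessions is easier to defend when tampering would be detectable. The Python sketch below, using only the standard library, chains each stored prompt and response to the previous entry with a SHA-256 hash. `ChatRetentionLog` is a hypothetical class, and a hash chain illustrates tamper evidence; it is not a substitute for a firm's approved books-and-records system.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

class ChatRetentionLog:
    """Append-only log of AI chat turns where each entry hashes the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same content always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, user: str, prompt: str, response: str) -> dict:
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "prompt": prompt,      # ideally the already-redacted prompt
            "response": response,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

log = ChatRetentionLog()
log.append("advisor-7", "Summarize the portfolio for client [NAME]", "Summary ...")
log.append("advisor-7", "Draft a follow-up email", "Dear ...")
print(log.verify())   # True
log.entries[0]["response"] = "edited after the fact"
print(log.verify())   # False
```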

Solutions like Caviard.ai address this critical gap by automatically detecting and redacting 100+ types of PII, including names, addresses, and credit card numbers, before they reach AI systems. Because Caviard operates entirely locally in your browser, sensitive financial data never leaves your machine, yet employees keep the functionality they need. This transparent approach to redaction supports the oversight regulators expect while letting employees use AI safely.

How Explainable AI Redaction Works: Transparency in Data Protection

Modern explainable AI redaction systems operate through a sophisticated three-layer approach that prioritizes both security and transparency. At the core, these systems employ real-time detection algorithms that identify personally identifiable information as users type or upload documents, using machine learning and natural language processing to recognize over 100 types of sensitive data patterns.
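The pattern side of real-time detection can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the three regular expressions and the `detect_pii` helper are hypothetical and deliberately simple, covering only emails, US Social Security numbers, and card numbers, while production tools add ML/NLP models for entities such as names and addresses that patterns cannot catch. The Luhn checksum is a standard way to reject digit runs that cannot be valid card numbers.

```python
import re

def luhn_valid(number: str) -> bool:
    """Luhn checksum: filters out digit runs that cannot be real card numbers."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:       # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0

# Deliberately simple, illustrative patterns.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD_NUMBER": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),  # 13 to 19 digits
}

def detect_pii(text: str) -> list[tuple[str, str]]:
    """Return (entity_type, matched_text) pairs in the order they appear."""
    hits = []
    for entity, pattern in PATTERNS.items():
        for m in pattern.finditer(text):
            if entity == "CARD_NUMBER" and not luhn_valid(m.group()):
                continue  # checksum failed: probably not a card number
            hits.append((m.start(), entity, m.group()))
    return [(entity, value) for _, entity, value in sorted(hits)]

sample = "SSN 123-45-6789, card 4111 1111 1111 1111, mail jo@example.com"
print(detect_pii(sample))
# [('US_SSN', '123-45-6789'), ('CARD_NUMBER', '4111 1111 1111 1111'), ('EMAIL', 'jo@example.com')]
```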

Local processing represents a breakthrough in transparent data protection. Tools like Caviard.ai demonstrate how AI redaction can function entirely within your browser, ensuring no sensitive information ever reaches external servers. This approach addresses a fundamental compliance challenge: how do you prove data protection when the protection mechanism itself requires data access?
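Local processing means redaction happens before a request to a cloud model is ever assembled. The Python sketch below illustrates that ordering plus a fail-closed check; `build_outbound_request` is a hypothetical helper, the two rules are illustrative, and the request body is a generic chat-style shape rather than any vendor's API. No network call is made.

```python
import re

# Illustrative rules only; a real tool would reuse its full local detector here.
LOCAL_RULES = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}

def redact_locally(text: str) -> str:
    """Replace each match with a typed placeholder, entirely in local memory."""
    for label, pattern in LOCAL_RULES.items():
        text = pattern.sub(f"[{label}]", text)
    return text

def build_outbound_request(raw_prompt: str) -> dict:
    """Assemble a generic chat-style request body from the redacted prompt only."""
    safe_prompt = redact_locally(raw_prompt)
    # Defensive re-check: if any rule still matches, fail closed instead of sending.
    if any(pattern.search(safe_prompt) for pattern in LOCAL_RULES.values()):
        raise ValueError("unredacted PII detected; request blocked")
    return {"messages": [{"role": "user", "content": safe_prompt}]}

request = build_outbound_request("Client 123-45-6789 (ann@example.org) wants a rebalance.")
print(request["messages"][0]["content"])  # Client [SSN] ([EMAIL]) wants a rebalance.
```

The design choice is ordering: the raw prompt is never placed in the request object, so nothing downstream can leak it by accident.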

The toggle functionality separates leading solutions from basic redaction tools. Users can instantly switch between redacted and original text using keyboard shortcuts, allowing compliance officers to verify accuracy without compromising security. This feature proves essential during GDPR audits and privacy impact assessments, where organizations must demonstrate both effectiveness and accountability.
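A toggle between original and redacted text implies the tool keeps a local mapping from each placeholder back to the value it hides. The Python sketch below assumes that design, using numbered placeholders for email addresses only; `ReversibleRedactor` is a hypothetical class, and this is not a description of how any particular extension implements its toggle.

```python
import re

class ReversibleRedactor:
    """Swap email addresses for numbered placeholders; the mapping never leaves memory."""

    EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")

    def __init__(self) -> None:
        self.mapping: dict[str, str] = {}   # placeholder -> original value

    def redact(self, text: str) -> str:
        def substitute(match: re.Match) -> str:
            value = match.group()
            for token, original in self.mapping.items():
                if original == value:       # same value, same placeholder
                    return token
            token = f"[EMAIL_{len(self.mapping) + 1}]"
            self.mapping[token] = value
            return token
        return self.EMAIL.sub(substitute, text)

    def restore(self, text: str) -> str:
        """The 'toggle back' view: reinsert the original values for a reviewer."""
        for token, original in self.mapping.items():
            text = text.replace(token, original)
        return text

r = ReversibleRedactor()
original = "Copy a@x.com and b@y.com, then a@x.com again."
shown = r.redact(original)
print(shown)                          # Copy [EMAIL_1] and [EMAIL_2], then [EMAIL_1] again.
print(r.restore(shown) == original)   # True
```

Only the redacted string would leave the device; because the mapping stays local, a compliance reviewer can flip back to the original without the AI service ever seeing it.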

Technical implementation typically involves a two-stage pipeline: first, OCR and text extraction engines capture content from various formats; second, large language models classify segments as PII or non-PII based on regulatory guidelines. The system then masks sensitive data with contextually appropriate substitutes—replacing "James Smith" with "J___________" rather than generic placeholders—maintaining conversational flow while protecting privacy.
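The two-stage pipeline can be sketched with both stages replaced by stand-ins, since OCR and an LLM call are out of scope for a short example. In this Python sketch `extract_segments` just tokenizes plain text, and the classifier is a hypothetical lambda that flags words from a fixed name list; a real system would call a model at that point. Masking keeps each flagged word's first letter and its length.

```python
import re
from typing import Callable

def extract_segments(document: str) -> list[str]:
    """Stage 1 stand-in. A real pipeline would run OCR or format-specific
    text extraction here; this sketch splits plain text into words and separators."""
    return re.findall(r"\w+|\W+", document)

def mask_segment(segment: str) -> str:
    """Keep the first character and the length: 'James' -> 'J____'."""
    return segment[0] + "_" * (len(segment) - 1)

def redact_document(document: str, is_pii: Callable[[str], bool]) -> str:
    """Stage 2: ask the classifier about each segment and mask those flagged as PII."""
    return "".join(
        mask_segment(seg) if is_pii(seg) else seg
        for seg in extract_segments(document)
    )

# Hypothetical stand-in for an LLM classifier: flags words from a fixed name list.
KNOWN_NAMES = {"James", "Smith"}

print(redact_document("Email James Smith the Q3 summary.", lambda s: s in KNOWN_NAMES))
# Email J____ S____ the Q3 summary.
```

Because this masks word by word, "James Smith" becomes "J____ S____"; a classifier that returns whole-name spans would instead yield one first-letter mask across the full name.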

This transparent architecture enables organizations to scale data privacy efforts without sacrificing operational efficiency, meeting the dual mandate of protecting personal information while maintaining detailed audit trails.

Caviard.ai: Leading the Way in Compliant, Explainable AI Prompting

The future of AI compliance isn't just about meeting regulations—it's about building trust through transparency. Caviard.ai demonstrates what explainable AI redaction looks like in practice: a Chrome extension that processes everything locally in your browser, automatically detecting over 100 types of PII in real-time while you interact with ChatGPT or DeepSeek.

What sets Caviard apart is its commitment to the transparency principles explored throughout this analysis. Unlike cloud-based solutions that require you to trust external servers with your sensitive data, Caviard keeps all information on your machine. A keyboard shortcut toggles between original and redacted text so you can see exactly what was masked, which supports the review and oversight regulators expect while letting employees work efficiently.

| Feature | Compliance Benefit |
|---------|-------------------|
| 100% Local Processing | PII never leaves the device; supports GDPR data minimization |
| Real-time Detection | Prevents PII leakage before it reaches AI services |
| Toggle Visibility | Makes every redaction visible for review and audit |
| Customizable Rules | Adapts to organization-specific compliance requirements |

As financial institutions navigate increasingly complex regulatory landscapes, tools that prioritize explainability from the ground up become not just compliance necessities but competitive advantages. The question isn't whether to adopt explainable AI redaction—it's whether you can afford the risk of waiting.

Implementing Explainable AI Redaction: Best Practices for Financial Institutions

Building a successful explainable AI redaction system takes more than technology; it demands a comprehensive roadmap that covers governance, training, and compliance integration. According to ACA Group's 2024 AI Benchmarking Survey, only 32% of financial services firms have established AI governance structures, leaving the remaining 68% exposed to significant regulatory risk.

Start with a Phased Implementation Approach

Begin with low-risk, high-impact use cases that enhance existing compliance frameworks. For financial institutions handling sensitive customer data during AI interactions, Caviard.ai provides an optimal starting point. This Chrome extension operates entirely locally in your browser, automatically detecting and redacting over 100 types of PII in real-time before data reaches AI services like ChatGPT—eliminating the risk of sensitive information exposure while maintaining full conversation context.

Establish Robust Data Governance First

Financial services compliance experts point out that organizations making extensive use of security AI report data breach costs $1.76 million lower than those that don't. Before deploying AI redaction tools, put minimum encryption standards, access restrictions, and comprehensive log retention in place. Your framework should include clear audit rights over model behavior and performance guarantees tied to accuracy thresholds.
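Performance guarantees tied to accuracy thresholds only mean something if they can be measured. A minimal Python sketch, assuming a hand-labeled sample where PII is recorded as (start, end) character spans and matches must be exact: it computes precision and recall for the redaction tool and checks them against thresholds. The span values and the 0.95 and 0.80 thresholds are made up for illustration.

```python
from dataclasses import dataclass

Span = tuple[int, int]  # (start, end) character offsets of a PII span

@dataclass
class EvalResult:
    precision: float  # share of redactions that were real PII
    recall: float     # share of real PII that got redacted

def evaluate(predicted: set[Span], gold: set[Span]) -> EvalResult:
    """Exact-match scoring: a predicted span counts only if it equals a labeled span."""
    hits = len(predicted & gold)
    precision = hits / len(predicted) if predicted else 1.0
    recall = hits / len(gold) if gold else 1.0
    return EvalResult(precision, recall)

def meets_thresholds(result: EvalResult, min_recall: float, min_precision: float) -> bool:
    # Missed PII (low recall) is usually the costlier failure for redaction,
    # so recall typically carries the stricter threshold.
    return result.recall >= min_recall and result.precision >= min_precision

gold = {(0, 11), (20, 31), (40, 56), (70, 84)}        # hand-labeled PII spans
predicted = {(0, 11), (20, 31), (40, 56), (90, 95)}   # what the tool redacted
result = evaluate(predicted, gold)
print(result)                                          # EvalResult(precision=0.75, recall=0.75)
print(meets_thresholds(result, min_recall=0.95, min_precision=0.80))  # False
```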

Design Comprehensive Training Programs

Employee adoption determines success. Industry best practices highlight the importance of training staff on explainable AI principles, building trust through transparency, and securing executive buy-in. Develop role-specific training modules covering why decisions are made, how to interpret AI explanations, and when human oversight is required. Regular workshops should address emerging regulatory requirements from Treasury guidance and CFTC advisories.

The Business Case: Benefits Beyond Compliance

While regulatory compliance drives initial investment in explainable AI redaction, the real value extends far beyond avoiding penalties. Organizations implementing transparent AI redaction systems are discovering significant competitive advantages that directly impact their bottom line.

Enhanced Customer Trust and Loyalty

According to Explainable AI in Data Analytics: Building Trust and Clarity, businesses leveraging explainable AI gain a competitive edge by fostering trust and delivering transparent solutions. When customers understand how their data is being protected—not just that it's protected—they're more likely to engage deeply with AI-powered services. This transparency becomes a powerful differentiator in markets where data privacy concerns continue to escalate.

Measurable Productivity Gains and Cost Savings

The productivity impact is substantial. Research projects that AI could improve employee productivity by 40%, which would make it one of the most significant technological advances for modern enterprises. By implementing AI-driven data protection, companies can reduce breach costs, avoid compliance penalties, and improve operational efficiency at the same time.

Reduced Data Breach Risk

The stakes have never been higher. As demonstrated in The 7 Most Telling Data Breaches of 2024, breaches involving sensitive personal data can threaten national security, criminal justice systems, and physical safety. A layered approach using data detection and response tools keeps sensitive information out of vulnerable cloud locations, significantly reducing exposure risk.

For organizations seeking comprehensive privacy protection, Caviard.ai stands out as an optimal solution. This Chrome extension processes everything locally in your browser, automatically detecting 100+ types of PII in real-time while you work with AI services like ChatGPT. Unlike cloud-based alternatives, Caviard ensures no sensitive data ever leaves your machine—providing the transparency and control that both compliance officers and end-users demand.

Future-Proofing Your AI Strategy: Explainability as a Competitive Advantage

The AI governance landscape is transforming at breakneck speed. According to The Future of AI Governance, the AI governance market is projected to explode from $890 million to $5.8 billion over the next seven years—a clear signal that regulatory scrutiny is intensifying. Organizations that embed explainability into their AI strategy today aren't just checking compliance boxes; they're building a competitive moat for tomorrow's regulatory environment.
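To put the projection in perspective, growth from $890 million to $5.8 billion over seven years implies a compound annual growth rate of roughly 31%, assuming the two figures sit exactly seven years apart:

```latex
\text{CAGR} = \left(\frac{5.8 \times 10^{9}}{0.89 \times 10^{9}}\right)^{1/7} - 1 \approx 6.52^{1/7} - 1 \approx 0.307
```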

Think of explainability as insurance for your AI investments. As AI regulations in finance evolve, financial institutions face increasingly complex regulatory webs that extend far beyond consumer protection. The Goldman Sachs Apple Card controversy exposed a harsh truth: technically compliant AI systems can still fail the public transparency test. Organizations now need robust audit trails that satisfy both regulators and the public eye.

For financial services leaders, explainability creates three distinct advantages. First, it accelerates regulatory approval cycles—auditors can quickly verify decision logic without weeks of back-and-forth. Second, it enables rapid response to new regulations without rebuilding systems from scratch. Third, it builds client trust in an era where AI skepticism remains high. When you can show exactly why your AI recommended a particular investment strategy or flagged a transaction, you transform opacity into confidence.

The smart move? Start integrating explainability frameworks now, before mandates force rushed implementations. RGP's research on AI in financial services confirms that proactive governance strategies consistently outperform reactive compliance scrambles. Organizations that treat explainability as a design principle—not an afterthought—will navigate tomorrow's regulatory landscape with agility while competitors struggle to adapt.

Conclusion: Taking Action on Explainable AI Compliance

The regulatory landscape demands more than passive compliance; it requires proactive leadership in explainable AI implementation. Financial institutions face a critical choice: keep running opaque AI systems and risk breaches that average $6.08 million, or adopt transparent redaction solutions that turn a liability into a competitive advantage.

Take These Action Steps Now:

| Stakeholder | Immediate Priority | Long-Term Goal |
|-------------|-------------------|----------------|
| Compliance Officers | Audit current AI data flows and PII exposure risks | Build comprehensive explainability frameworks with audit trails |
| IT Leaders | Deploy local processing solutions like Caviard.ai for browser-level protection | Integrate explainable AI across all customer-facing systems |
| Executives | Establish AI governance structures (currently absent in 68% of firms) | Position explainability as market differentiator and trust builder |

Frequently Asked Questions:

How quickly can we implement explainable AI redaction? Solutions like Caviard.ai offer immediate deployment—install the Chrome extension and gain real-time PII detection across 100+ data types, all processing locally in your browser with no infrastructure changes required.

What's the ROI timeline? Risk reduction begins as soon as sensitive data stops reaching external AI services; savings on breach costs and compliance penalties take longer to measure, while productivity gains from safe AI usage compound over time.

Your Next Move: Don't wait for the next regulatory enforcement action. Start with a low-risk, high-impact pilot using transparent local processing tools, then scale your explainability framework across operations.