How to Integrate Redact AI Prompting Seamlessly into Existing Data Workflows

Picture this: your marketing team just pasted a customer list into ChatGPT to draft personalized emails. Within seconds, names, addresses, and purchase histories have been transmitted to servers you don't control, and there's no undo button. Scenarios like this play out every day across organizations rushing to adopt AI tools, creating a compliance nightmare that keeps security teams awake at night.

The challenge isn't whether to use AI—it's how to harness its power without exposing the sensitive data your customers trust you to protect. Redact AI prompting offers a practical solution: automatically detecting and masking personally identifiable information before it reaches AI models, transforming risky prompts into privacy-compliant conversations. This approach lets your teams maintain their AI-powered productivity while ensuring every customer name, financial detail, and health record stays protected.

In this guide, you'll discover a step-by-step framework for integrating redaction seamlessly into your existing workflows—from assessing your current data pipelines to measuring success with concrete KPIs. Whether you're a compliance officer navigating GDPR requirements or a data team leader balancing innovation with security, you'll walk away with actionable strategies that protect sensitive information without slowing down your AI adoption. Let's transform your AI interactions from a privacy liability into a competitive advantage.

Understanding Redact AI Prompting: What It Is and Why It Matters

When you paste customer data into ChatGPT or upload a spreadsheet to an AI assistant, you're potentially exposing sensitive information to systems you don't control. Redact AI prompting is a privacy-first approach that automatically detects and masks personally identifiable information (PII) before it reaches AI models, transforming "John Smith, SSN 123-45-6789" into "J___ S____, SSN ***-**-****" in real time.
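To make the transformation concrete, here is a minimal Python sketch that masks two structured identifier types with regular expressions before a prompt is sent anywhere. The patterns and the `mask_prompt` name are illustrative assumptions, not how any particular product works. Names like "John Smith" are deliberately left alone, because reliably spotting them needs a Named Entity Recognition (NER) model rather than a regex.

```python
import re

# Illustrative patterns (assumptions): US SSNs written as 123-45-6789 and
# simple email addresses. Names and addresses need NER, not shown here.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def mask_prompt(text: str) -> str:
    """Mask SSN- and email-shaped substrings before the prompt leaves the machine."""
    text = SSN_RE.sub("***-**-****", text)
    return EMAIL_RE.sub("[EMAIL]", text)


print(mask_prompt("John Smith, SSN 123-45-6789, jsmith@example.com"))
# John Smith, SSN ***-**-****, [EMAIL]
```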

The stakes couldn't be higher. Large Language Models can memorize and leak sensitive training data, creating serious privacy risks for organizations. According to research on AI data security, AI systems must now adhere to strict ethical standards and privacy norms alongside traditional compliance requirements.

Why This Matters for Your Business:

- Regulatory Compliance: GDPR, CCPA, and HIPAA regulations impose strict requirements on how organizations handle personal data in AI systems

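- Data Breach Prevention: Data that is masked before it leaves your environment can't be exposed by a downstream breach or leak, and 60% of large organizations now plan to use privacy-enhancing technologies like data masking in analytics and AI development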

- Trust Building: Many organizations lack automated systems to monitor how data is used in AI tools, which makes proactive redaction essential for protecting the trust customers place in you

For seamless integration, Caviard.ai offers a browser-based solution that detects 100+ types of PII locally—meaning your sensitive data never leaves your machine. It automatically masks information like names, addresses, and financial details while preserving conversational context, making it ideal for teams already using AI tools daily.

The bottom line: redact AI prompting isn't just about compliance—it's about enabling innovation while maintaining the privacy standards your customers expect.

Assessing Your Current Data Workflow: Pre-Integration Checklist

Before integrating AI privacy tools into your operations, you need to understand where sensitive data lives and flows. Think of this like getting a health checkup before starting a new fitness program—you need to know your baseline first.

Start by conducting a comprehensive PII data audit to identify all personally identifiable information within your organization. This process reveals where customer names, addresses, financial details, and other sensitive data currently exist across your systems. Map every touchpoint where data enters, moves through, and exits your infrastructure.
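A first pass of this audit can be partly automated. The sketch below assumes you can pull sample records from each system into Python strings; the `DETECTORS` patterns and the `inventory` helper are hypothetical and cover only three PII types, so treat the counts as a lower bound rather than a complete inventory.

```python
import re
from collections import Counter

# Hypothetical detectors for an audit pass; extend with the PII types you handle.
DETECTORS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def inventory(sources: dict[str, list[str]]) -> dict[str, Counter]:
    """Count PII hits by type for each data source (CRM export, ticket queue, ...)."""
    report = {}
    for source, records in sources.items():
        counts = Counter()
        for record in records:
            for pii_type, pattern in DETECTORS.items():
                hits = len(pattern.findall(record))
                if hits:
                    counts[pii_type] += hits
        report[source] = counts
    return report


if __name__ == "__main__":
    samples = {
        "support_tickets": ["Call me at 555-123-4567", "My SSN is 123-45-6789"],
        "crm_notes": ["Prefers email: ana@example.com"],
    }
    for source, counts in inventory(samples).items():
        print(source, dict(counts))
```

Sources with the highest counts are natural candidates for the first redaction controls.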

Next, pinpoint exactly where AI interactions happen. Ask yourself: Which teams use ChatGPT, Claude, or other AI tools? What data do they typically input? Are customer support agents pasting support tickets? Are developers sharing code snippets? According to data privacy compliance experts, identifying these AI touchpoints is critical for compliance and risk management.

Your Pre-Integration Assessment Questions:

- Where does PII currently flow through your organization?

- Which employees regularly interact with AI tools?

- What types of sensitive data appear in AI prompts?

- Do you have existing access controls and audit logs tracking data interactions?

For seamless protection, consider solutions like Caviard.ai, which operates entirely locally in your browser and automatically detects over 100 types of PII in real-time. This Chrome extension masks sensitive data before it reaches AI services, with no setup required and zero data leaving your machine—making it an ideal first layer of defense for organizations just beginning their privacy journey.

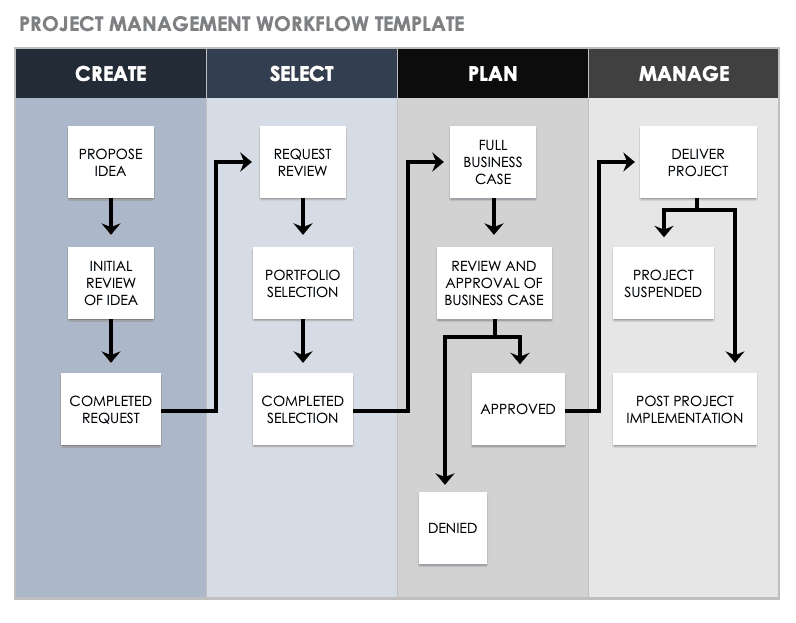

5 Essential Steps to Integrate Redact AI Prompting into Your Data Pipeline

Protecting sensitive information while leveraging AI doesn't have to disrupt your workflow. Here's your roadmap to seamless integration of redaction capabilities into existing data pipelines.

Step 1: Map Your Sensitive Data Touchpoints

Start by auditing where PII enters and moves through your systems. Document every point where customer names, addresses, credit card numbers, or health records appear—from intake forms to database queries to AI prompts. According to a wealth management institution's case study, identifying these touchpoints enabled them to process sensitive information without interrupting business operations while achieving 90-95% accuracy in redaction.

Step 2: Choose Your Redaction Solution

Select tools that fit your technical environment and scale requirements. For teams working with AI services like ChatGPT or DeepSeek, Caviard.ai offers an optimal solution with 100% local processing—meaning your sensitive data never leaves your browser. This Chrome extension automatically detects over 100 types of PII in real-time as you type, replacing them with realistic substitutes while preserving conversation context. For enterprise-scale operations, Strac provides comprehensive data redaction across PDFs, images, and SaaS platforms like Zendesk and Salesforce.

Step 3: Configure Real-Time Detection

Implement automated PII detection in two layers. Named Entity Recognition (NER) models identify context-dependent PII such as names, organizations, and locations across diverse document formats, while regex filters catch structured identifiers such as Social Security, credit card, and phone numbers. Expect to tune both over time: add patterns for identifiers specific to your business, and retrain or fine-tune the NER model on labeled examples of your own data types and formats.
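As one example of tuning the regex layer, the sketch below pairs a broad pattern for card-like numbers with a Luhn checksum, which payment card numbers satisfy, so that arbitrary 16-digit order or tracking numbers are less likely to be masked by mistake. The pattern and function names are assumptions for illustration, and the NER layer is not shown.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by single spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def redact_cards(text: str) -> str:
    """Mask card-like numbers only when they pass the Luhn check."""

    def replace(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        return "[CARD]" if luhn_valid(digits) else match.group()

    return CARD_CANDIDATE.sub(replace, text)


print(redact_cards("Card 4111 1111 1111 1111, order 1234-5678-9012-3456"))
# Card [CARD], order 1234-5678-9012-3456
```

A non-card number that happens to pass the checksum will still be masked, so the check reduces false positives rather than eliminating them.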

Step 4: Establish Prompt Engineering Guardrails

While there are no universal industry standards for LLM guardrails, established practices include input and output filtering and secondary validation models. Use post-processing to redact any remaining PII before outputs reach end users, and consider persona-switch protections to defend against prompt injection attacks.
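A minimal sketch of input and output filtering around a model call follows. `call_model` stands in for whatever client function sends a prompt to your LLM, and the phrase list for persona-switch attempts is a naive assumption; real prompt-injection defenses usually rely on classifiers or secondary validation models rather than keyword matching.

```python
import re
from typing import Callable

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# Naive persona-switch phrases (assumption); not an exhaustive or standard list.
INJECTION_HINTS = ("ignore previous instructions", "you are now", "disregard your rules")


def scrub(text: str) -> str:
    """Replace SSN- and email-shaped strings with placeholders."""
    return EMAIL_RE.sub("[EMAIL]", SSN_RE.sub("[SSN]", text))


def guarded_call(prompt: str, call_model: Callable[[str], str]) -> str:
    """Input filter -> model -> output filter.

    `call_model` is a hypothetical function that sends a prompt to your LLM
    and returns its text response.
    """
    if any(hint in prompt.lower() for hint in INJECTION_HINTS):
        raise ValueError("Prompt rejected: possible persona-switch attempt")
    response = call_model(scrub(prompt))  # input filtering
    return scrub(response)  # output post-processing before the user sees it


if __name__ == "__main__":
    def fake_model(p: str) -> str:
        return f"Drafted reply for: {p}"

    print(guarded_call("Write to bob@example.com about his claim", fake_model))
```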

Step 5: Build Validation and Monitoring Processes

Create feedback loops that continuously verify redaction accuracy. Log redacted inputs and outputs (never the raw, unmasked text) for periodic auditing, set up alerts for potential data leaks, and track performance metrics to ensure your guardrails stay effective without creating bottlenecks in your workflow.
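The audit-log step might look like the sketch below, which assumes prompts are already redacted before they are logged. It checks each model output for leftover PII-shaped strings, stores only a SHA-256 digest of any output that looks leaky, and raises a flag; the `print` in `append_log` is a placeholder for whatever alerting system you use, and all names are hypothetical.

```python
import hashlib
import json
import re
import time

# PII-shaped strings that should never survive into a model output.
LEAK_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def audit_record(redacted_prompt: str, model_output: str) -> dict:
    """Build one audit-log entry; the prompt is assumed to be redacted already."""
    leaks = [name for name, pattern in LEAK_PATTERNS.items() if pattern.search(model_output)]
    return {
        "ts": time.time(),
        "prompt": redacted_prompt,
        # Keep the text only when it looks clean; otherwise store just a digest.
        "output": None if leaks else model_output,
        "output_sha256": hashlib.sha256(model_output.encode("utf-8")).hexdigest(),
        "leak_types": leaks,
        "alert": bool(leaks),
    }


def append_log(path: str, record: dict) -> None:
    """Append the entry as one JSON line and fire the (placeholder) alert hook."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    if record["alert"]:
        print(f"ALERT: possible PII leak ({', '.join(record['leak_types'])})")
```

A digest lets auditors correlate entries without keeping the text, but digests of short, predictable strings can be brute-forced, so access to the log still needs to be restricted.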

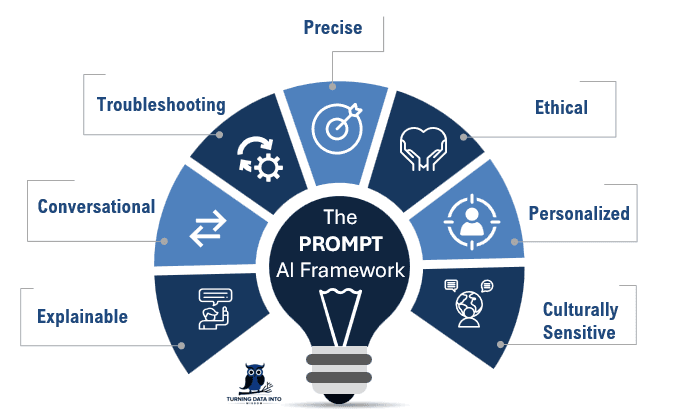

Prompt Engineering Best Practices for Redacted Data Workflows

Working with redacted data requires a fundamentally different approach to prompt engineering. According to Privacy Risks in Prompt Data and Solutions, 8.5% of GenAI prompts contain sensitive data, making strategic masking essential for maintaining both privacy and AI output quality.

Context Preservation Through Strategic Masking

The key challenge with redacted data is maintaining enough context for the AI to generate meaningful outputs. When sensitive information is masked—whether names, addresses, or financial details—your prompts must explicitly provide the context that would normally be inferred. As noted in Prompt Engineering Best Practices, incorporating specific and relevant data into prompts significantly enhances response quality, even when that data is tokenized.

For example, instead of simply redacting a customer name, structure your prompt like: "Analyze the transaction pattern for CUSTOMER_ID_001, a retail customer with 3-year purchase history." This preserves the analytical value while protecting privacy.
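One way to produce stable tokens like CUSTOMER_ID_001 is a small local pseudonymizer that keeps the mapping on your side, so the model's answer can be mapped back to real values afterwards. The class below is a hypothetical sketch; it assumes the sensitive values were already found by an upstream detector and are passed in as a list.

```python
import re


class Pseudonymizer:
    """Swap sensitive values for stable tokens (CUSTOMER_ID_001, ...) and keep
    the mapping locally so model responses can be mapped back afterwards."""

    def __init__(self, prefix: str = "CUSTOMER_ID") -> None:
        self.prefix = prefix
        self.forward: dict[str, str] = {}
        self.reverse: dict[str, str] = {}
        self._token_re = re.compile(rf"{re.escape(prefix)}_\d{{3,}}")

    def token_for(self, value: str) -> str:
        if value not in self.forward:
            token = f"{self.prefix}_{len(self.forward) + 1:03d}"
            self.forward[value] = token
            self.reverse[token] = value
        return self.forward[value]

    def mask(self, text: str, values: list[str]) -> str:
        # Replace longer values first so "Ann Lee" is not broken up by "Ann".
        for value in sorted(values, key=len, reverse=True):
            text = text.replace(value, self.token_for(value))
        return text

    def unmask(self, text: str) -> str:
        # Unknown tokens are left untouched.
        return self._token_re.sub(lambda m: self.reverse.get(m.group(), m.group()), text)


if __name__ == "__main__":
    p = Pseudonymizer()
    prompt = p.mask("Analyze the transaction pattern for Maria Garcia, a retail customer.", ["Maria Garcia"])
    print(prompt)  # Analyze the transaction pattern for CUSTOMER_ID_001, a retail customer.
    print(p.unmask("CUSTOMER_ID_001 buys mostly in Q4."))  # Maria Garcia buys mostly in Q4.
```

Because the same value always maps to the same token, the model can still tell that two mentions refer to the same customer, which is the context the prompt needs.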

Leverage Tools for Automated Protection

Caviard.ai offers an optimal solution for teams working with AI tools like ChatGPT. This Chrome extension automatically detects and redacts over 100 types of PII in real time, entirely locally in your browser, so the original values never reach the AI service. The intelligent masking preserves conversation context while you maintain complete control, toggling between original and redacted text with a simple keyboard shortcut. For organizations handling sensitive workflows, this automated approach reduces the human error that comes with manual redaction while maintaining the natural flow of AI interactions.

Testing Framework for Quality Assurance

According to Mastering Prompt Engineering, implementing Chain-of-Thought (CoT) prompting with multiple iterations helps validate output quality. Run the same redacted prompt several times at a higher temperature setting, compare the responses, and use majority voting (a technique known as self-consistency) to confirm that answers stay consistent despite masked inputs.
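The voting step can be sketched as follows. `sample_fn` is a hypothetical wrapper that sends the redacted prompt to your model at the given temperature and returns only the final answer extracted from the chain-of-thought response; normalizing case and whitespace before counting is a simplifying assumption.

```python
from collections import Counter
from typing import Callable


def majority_answer(
    prompt: str,
    sample_fn: Callable[[str, float], str],
    n: int = 5,
    temperature: float = 0.9,
) -> tuple[str, float]:
    """Sample the same redacted prompt n times and majority-vote the answers.

    `sample_fn(prompt, temperature)` is a hypothetical wrapper around your model
    that returns only the final answer extracted from a chain-of-thought reply.
    Returns the winning answer and the share of samples that agreed with it.
    """
    answers = [sample_fn(prompt, temperature).strip().lower() for _ in range(n)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n
```

A low vote share is a useful signal in its own right: it can mean masking removed context the model needed, which is a cue to add descriptive context to the prompt rather than to unmask data.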

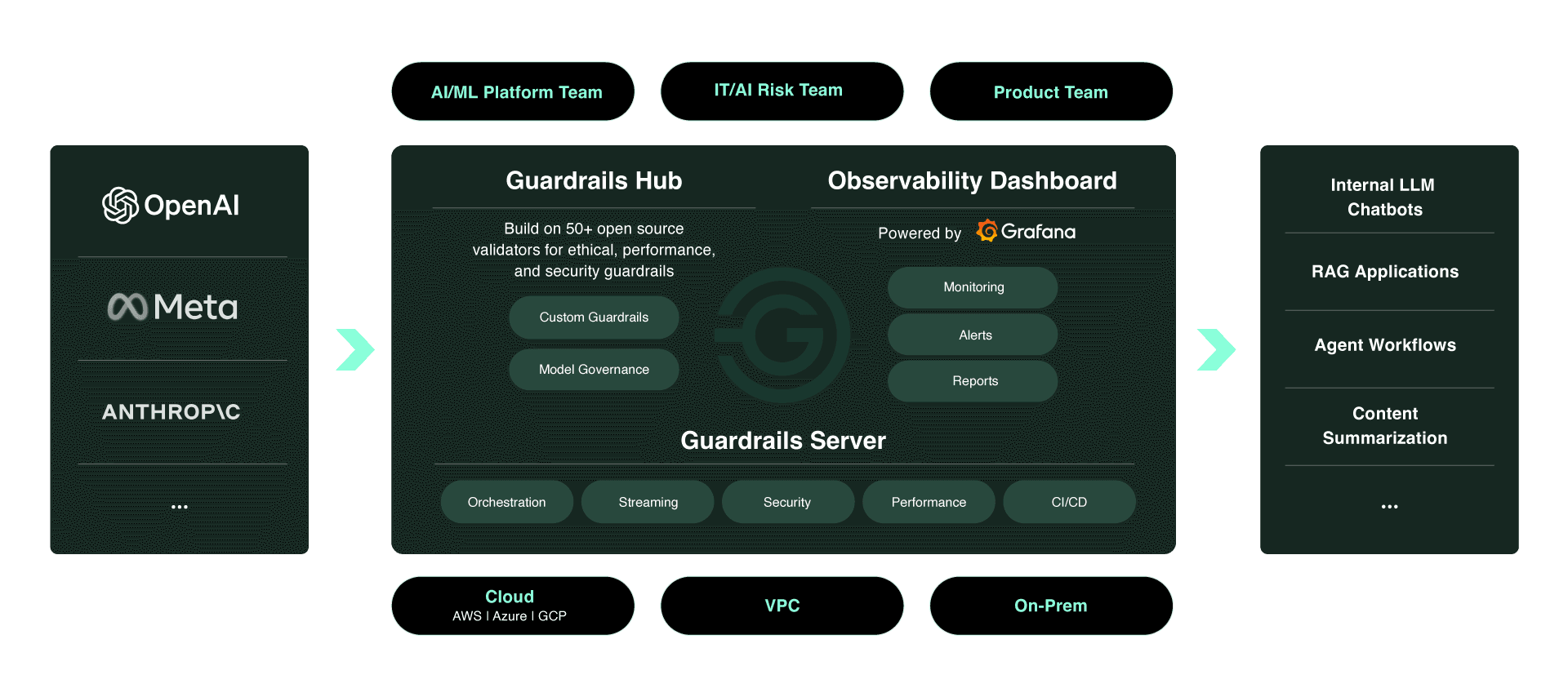

Tools and Technologies: Seamless Integration Solutions

Protecting sensitive information in AI workflows doesn't require complex infrastructure overhauls. Today's PII redaction landscape offers three primary integration approaches, each suited to different organizational needs and technical environments.

Browser-Based Solutions: Real-Time Protection at the Edge

Caviard.ai represents the cutting edge of browser-based PII protection, operating as a Chrome extension that processes everything locally on your machine. This approach automatically detects over 100 types of sensitive data—including names, addresses, credit card numbers, and medical information—in real-time as you type into AI services like ChatGPT or DeepSeek. The brilliance lies in its simplicity: zero configuration required, no data leaves your browser, and you maintain natural conversation flow with AI assistants.

According to research on real-time redaction implementation, this proactive approach has become increasingly crucial as organizations adopt generative AI technologies in enterprise contexts. Browser extensions shine for individual users and distributed teams who need immediate protection without IT infrastructure changes.

API-Level Integration: Enterprise-Grade Processing

For organizations handling high-volume data pipelines, API solutions offer programmatic control. Examples include Private AI for text and document redaction and AssemblyAI for redacting PII from audio files and their transcripts. These tools integrate directly into data workflows, enabling automated PII detection and removal across structured and unstructured data sources before information reaches AI models.
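For a pipeline-embedded setup, redaction can run as one stage in an existing batch job. In this sketch `redact_text` is a stand-in built from two regexes; in practice you would swap in a call to whichever vendor SDK or library you select. The function names and record layout are assumptions, and no vendor API is shown.

```python
import re
from typing import Iterable, Iterator

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def redact_text(text: str) -> str:
    # Placeholder for the redaction service or library you actually adopt.
    return EMAIL_RE.sub("[EMAIL]", SSN_RE.sub("[SSN]", text))


def redaction_stage(records: Iterable[dict], fields: tuple[str, ...]) -> Iterator[dict]:
    """Pipeline step that redacts selected free-text fields of each record
    before it moves on to any AI model. Other fields pass through unchanged."""
    for record in records:
        clean = dict(record)  # copy so the upstream record is not mutated
        for field in fields:
            if isinstance(clean.get(field), str):
                clean[field] = redact_text(clean[field])
        yield clean


if __name__ == "__main__":
    rows = [{"id": 1, "note": "SSN 123-45-6789"}, {"id": 2, "note": "mail a@b.io", "score": 7}]
    for row in redaction_stage(rows, fields=("note",)):
        print(row)
```

Using a generator keeps memory flat for large batches and lets the stage slot between an existing reader and the step that calls the model.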

Selection Criteria: Matching Tools to Use Cases

Choose browser extensions when your team works with AI chatbots directly and needs instant protection. Opt for API solutions when you're building automated data pipelines or processing large document volumes. Consider pipeline-embedded approaches for legacy systems requiring minimal disruption to existing workflows.

Real-World Implementation: Enterprise Case Studies and Results

Organizations across regulated industries are discovering that redact AI prompting delivers measurable compliance improvements without sacrificing productivity. According to AI Healthcare Compliance: 7 Ways to Break Risk Chain in 2025, healthcare institutions implementing AI compliance systems have achieved an 87% reduction in regulatory violations while cutting compliance costs by 42%.

Financial Services Success Story

A cohort of 11 financial services organizations participating in a Compliance.ai case study demonstrated dramatic efficiency gains. Compliance teams previously spent an average of 5 minutes reviewing each regulatory document—processing 25,537 documents manually over six months. By automating relevance filtering, they reduced the document review workload by 94%, saving 87 days of work every six months. This allowed teams to focus on genuine compliance risks rather than administrative processing.

Healthcare Implementation Results

Healthcare providers face severe consequences for privacy failures, with over $100 million in fines levied recently for unauthorized data sharing through tracking pixels. Organizations adopting AI-powered redaction tools now ensure sensitive patient data remains protected during AI interactions. For teams needing browser-level protection, Caviard provides real-time PII detection that operates entirely locally, automatically masking over 100 types of sensitive data before prompts reach AI services like ChatGPT—with zero data leaving the user's machine.

These implementations demonstrate that strategic redaction integration delivers simultaneous compliance improvements, risk mitigation, and operational efficiency gains across enterprise workflows.

Sources used:

- AI Healthcare Compliance: 7 Ways to Break Risk Chain in 2025

- Compliance.ai Usage Case Study: Financial Services

- Health Law Highlights - Healthcare Empowered

- Caviard.ai

Overcoming Common Integration Challenges and Pitfalls

Integrating AI redaction tools into existing workflows rarely goes smoothly on the first try. Think of it like installing a new security system in your home—you'll inevitably encounter compatibility issues, false alarms, and resistance from family members who forget the new code.

The most frustrating challenge? False positives and negatives in PII detection. According to The Case Of False Positives And Negatives In AI Privacy Tools, shifting from rigid pattern-matching to context-aware approaches significantly improves accuracy. Deterministic Techniques for Reliable PII Redaction recommends combining dictionary-based and pattern-based methods for broader coverage. For instance, Caviard.ai uses intelligent, real-time detection that identifies over 100 types of PII while preserving conversation context—addressing this problem at the source.
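To illustrate combining dictionary-based and pattern-based detection, the sketch below unions hits from a name list (for example, exported from a CRM) with regex hits, then merges overlapping spans so nothing is left half-masked. The dictionary, labels, and merge policy are illustrative assumptions, not the method described in the cited article or used by any specific tool.

```python
import re

# Dictionary-based detection: known names, e.g. exported from a CRM (illustrative).
NAME_DICTIONARY = ["Maria Garcia", "Li Wei"]
NAME_RE = re.compile(
    r"\b(?:" + "|".join(re.escape(n) for n in sorted(NAME_DICTIONARY, key=len, reverse=True)) + r")\b",
    re.IGNORECASE,
)
# Pattern-based detection: structured identifiers.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def find_spans(text: str) -> list[tuple[int, int, str]]:
    """Collect (start, end, label) hits from both detection methods."""
    spans = [(m.start(), m.end(), "NAME") for m in NAME_RE.finditer(text)]
    for label, pattern in PATTERNS.items():
        spans.extend((m.start(), m.end(), label) for m in pattern.finditer(text))
    return spans


def redact(text: str) -> str:
    """Mask the union of all hits, merging overlapping spans into one."""
    merged: list[tuple[int, int, str]] = []
    for start, end, label in sorted(find_spans(text)):
        if merged and start < merged[-1][1]:
            prev_start, prev_end, prev_label = merged[-1]
            # Overlapping hits from different detectors get a generic label.
            new_label = prev_label if prev_label == label else "PII"
            merged[-1] = (prev_start, max(prev_end, end), new_label)
        else:
            merged.append((start, end, label))
    # Replace right-to-left so earlier offsets stay valid.
    for start, end, label in reversed(merged):
        text = text[:start] + f"[{label}]" + text[end:]
    return text
```

The word boundaries around dictionary entries keep "Li Wei" from matching inside unrelated words, which is one common source of false positives.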

Performance impacts often catch teams off-guard. When redaction tools slow down your workflows, start by processing locally rather than sending data to external APIs. Solutions like Caviard process everything directly in your browser, eliminating network latency and privacy concerns simultaneously.

User adoption resistance typically stems from workflow disruptions. Combat this by choosing tools with minimal friction—keyboard shortcuts for toggling between redacted and original text, for example, help users verify accuracy without abandoning their natural workflow. Addressing PII Redaction Challenges with PII Redaction Software emphasizes that integrating automated AI technologies with human oversight prevents data leakage while maintaining user trust.

For cross-platform compatibility, prioritize browser-based extensions that work seamlessly across different AI platforms, from ChatGPT to DeepSeek, rather than platform-specific solutions that fragment your security approach.

Measuring Success: KPIs and Monitoring for Redacted AI Workflows

Successfully integrating redaction into your AI workflows requires clear visibility into performance and impact. According to Privacy Compliance Measurements: DPDP Act compliance metrics, "You can't manage what you can't measure." Establishing the right metrics framework transforms privacy compliance from a checkbox exercise into a strategic advantage.

Essential KPIs to Track Integration Effectiveness

Start by monitoring PII exposure incidents as your primary safety metric. Track both near-misses (caught by redaction) and actual exposures to quantify risk reduction. Data Privacy KPIs: The Executive Guide to Turning Compliance into Advantage recommends tracking incident count, severity, and response time as critical breach metrics that demonstrate your preventative controls' effectiveness.
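A small sketch of those breach metrics follows. The `Incident` record, the 1 to 4 severity scale, and the catch-rate definition (near-misses divided by all detected events) are assumptions chosen for illustration; adapt them to your incident-management data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Incident:
    kind: str                 # "near_miss" (caught by redaction) or "exposure"
    severity: int             # assumed scale: 1 (low) to 4 (critical)
    minutes_to_respond: float


def privacy_kpis(incidents: list[Incident]) -> dict:
    """Summarize incident count, severity, and response time."""
    exposures = [i for i in incidents if i.kind == "exposure"]
    near_misses = [i for i in incidents if i.kind == "near_miss"]
    detected = len(exposures) + len(near_misses)
    return {
        "exposures": len(exposures),
        "near_misses": len(near_misses),
        # Share of detected events that redaction stopped before exposure.
        "catch_rate": len(near_misses) / detected if detected else None,
        "max_exposure_severity": max((i.severity for i in exposures), default=0),
        "mean_minutes_to_respond": (
            mean(i.minutes_to_respond for i in exposures) if exposures else None
        ),
    }
```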

Redaction accuracy rates measure how well your system identifies and masks sensitive data. For tools like Caviard.ai, which detects 100+ types of PII in real time locally in your browser, aim to catch 95% or more of the PII in a hand-labeled test sample (recall) while keeping false positives low (precision). This Chrome extension operates entirely on your machine, ensuring no sensitive data leaves your browser while seamlessly integrating with ChatGPT and DeepSeek workflows.
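Because a single "accuracy" figure can hide a trade-off, it helps to score detection as precision and recall against a hand-labeled sample. The sketch below assumes spans are recorded as `(start, end)` character offsets (or `(doc_id, start, end)` across a test set) and uses exact matching, so a partially masked entity counts as both a false positive and a miss; the function name is hypothetical.

```python
def detection_scores(predicted: set[tuple], gold: set[tuple]) -> dict:
    """Exact-match span scoring against a hand-labeled sample.

    Each span is a tuple such as (start, end) or (doc_id, start, end).
    Precision falls with false positives; recall falls with missed PII.
    """
    tp = len(predicted & gold)
    fp = len(predicted - gold)
    fn = len(gold - predicted)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1,
            "false_positives": fp, "missed": fn}
```

Against a 95% target you would check `recall >= 0.95` on the labeled sample and watch `false_positives` so precision does not slide as detection rules are broadened.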

Don't overlook AI output quality scores and user productivity metrics. According to research on AI in Employee Monitoring: Balancing Productivity and Privacy, AI-powered monitoring can spot inefficiencies human managers miss while tracking KPIs in real-time. Balance privacy protection with workflow efficiency—if redaction slows teams down significantly, adoption will suffer.

Target 85% or higher privacy compliance across confidential data discovery, redaction, and compliance audits. Build dashboards showing trends over time, not just point-in-time snapshots, and review metrics quarterly to drive continuous improvement in your data protection strategy.

Sources cited:

- Privacy Compliance Measurements: DPDP Act compliance metrics

- Data Privacy KPIs: The Executive Guide to Turning Compliance into Advantage

- Caviard.ai - 100% Local PII Protection

- AI in Employee Monitoring: Balancing Productivity and Privacy
