How-to: Optimize Redact ChatGPT Prompts for Enterprise Data Privacy Workflows

Your marketing team just asked IT to approve ChatGPT for daily use. Simple enough, right? Until you realize that Sarah from sales has already been pasting customer contracts into the free version for weeks, and nobody noticed until now. Every client name, every pricing detail, every confidential term—all potentially floating in OpenAI's training data. This scenario plays out in enterprises worldwide, where the productivity promise of AI collides head-first with the compliance nightmare of data exposure. The gap between what employees want (fast AI insights) and what security teams need (zero data leakage) seems impossible to bridge—until you discover that prompt redaction isn't just a policy checkbox, but a practical workflow transformation. This guide shows you exactly how to implement real-time data sanitization that protects sensitive information while preserving the AI capabilities your teams actually need, turning ChatGPT from a security liability into a compliant business asset.

Understanding the Risk: What Happens When Sensitive Data Meets ChatGPT

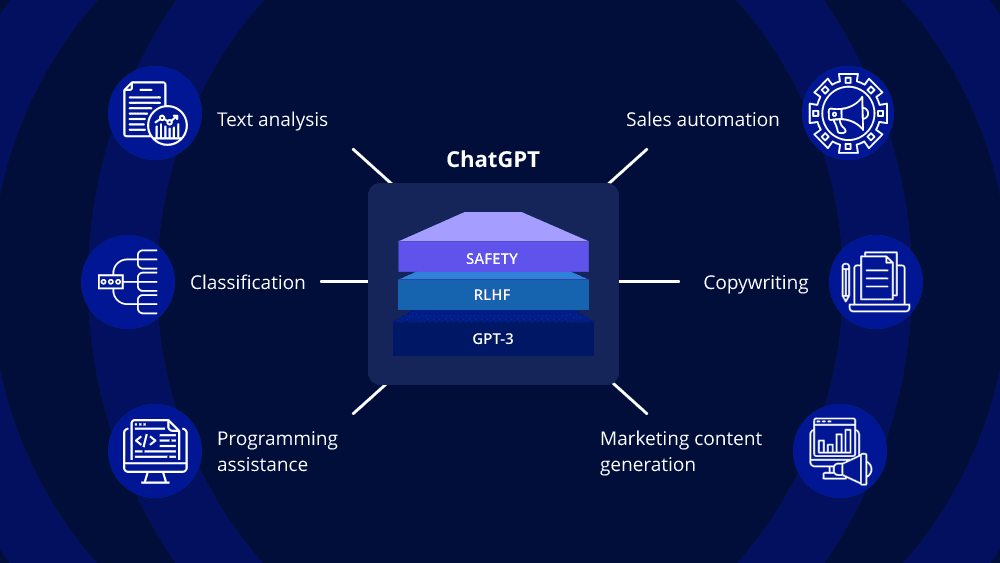

When employees paste information into ChatGPT, they're essentially handing over company secrets to a third party. According to LayerX Security's Enterprise AI and SaaS Data Security Report 2025, a staggering 77% of employees share sensitive company data through AI tools—often through personal, unmanaged accounts that completely bypass enterprise security controls.

Think of it like leaving your office door wide open with confidential files scattered on your desk. The Samsung data leak incident illustrates this perfectly: employees inadvertently exposed proprietary information simply by using ChatGPT for everyday tasks, not realizing their prompts could compromise trade secrets.

What's at risk? Common data exposures include:

- Customer names, addresses, and contact information (GDPR/CCPA violations)

- Social Security numbers and financial data

- Medical records and patient information (HIPAA breaches)

- Confidential business strategies and intellectual property

- Employee performance reviews and salary information

The compliance implications are severe. Healthcare organizations face particularly high stakes, with HIPAA breaches in July 2025 alone affecting millions of patients. GDPR and CCPA regulations carry substantial fines for unauthorized personal data processing, which is exactly what happens when employees paste PII into ChatGPT without proper safeguards.

The real danger? According to LayerX Security CEO Or Eshed, "having enterprise data leak via AI tools can raise geopolitical issues, regulatory and compliance concerns, and lead to corporate data being inappropriately used for training." Once your data enters ChatGPT, controlling where it goes becomes nearly impossible—unless you have the right protection in place from the start.

The Foundation: Data Sanitization and Redaction Strategies for AI Prompts

Before sending any prompt to ChatGPT or other AI services, you need a solid strategy for identifying and protecting sensitive information. Think of it like preparing documents for a public meeting—you wouldn't hand over unredacted files containing Social Security numbers, would you?

Modern data redaction encompasses three core methodologies. Full redaction completely removes sensitive data and replaces it with generic placeholders like [REDACTED]. Smart replacement swaps real values with contextually similar alternatives—replacing "John Smith" with "Person A" maintains conversational flow while protecting identity. Tokenization, the most sophisticated approach, replaces sensitive data with unique identifiers stored separately in secure databases, allowing you to preserve format and even maintain deterministic mapping across sessions.
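The three approaches can be compared side by side in a few lines of Python. This is a minimal sketch under stated assumptions, not how any particular product works: it detects only email addresses, and the names `EMAIL_RE`, `SECRET_KEY`, `full_redaction`, `smart_replacement`, and `tokenize` are hypothetical, chosen for illustration.

```python
import hashlib
import hmac
import re

# Illustrative pattern only: real detectors cover far more than email addresses.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Hypothetical key; in practice it would come from a secrets manager.
SECRET_KEY = b"example-key-not-for-production"


def full_redaction(text: str) -> str:
    """Replace every match with the same generic placeholder."""
    return EMAIL_RE.sub("[REDACTED]", text)


def smart_replacement(text: str) -> str:
    """Replace each distinct value with a readable stand-in (Email A, Email B, ...).

    Labels run A-Z, so this sketch assumes at most 26 distinct values.
    """
    labels: dict[str, str] = {}

    def swap(match: re.Match) -> str:
        value = match.group(0)
        if value not in labels:
            labels[value] = f"Email {chr(ord('A') + len(labels))}"
        return labels[value]

    return EMAIL_RE.sub(swap, text)


def tokenize(text: str, vault: dict[str, str]) -> str:
    """Replace each value with a keyed, deterministic token and record the mapping."""

    def swap(match: re.Match) -> str:
        value = match.group(0)
        digest = hmac.new(SECRET_KEY, value.encode(), hashlib.sha256).hexdigest()[:12]
        token = f"<EMAIL_{digest}>"
        vault[token] = value  # the vault is kept separately from the prompt
        return token

    return EMAIL_RE.sub(swap, text)
```

Because the token is derived from a keyed hash, the same input value maps to the same token in every session that uses the same key, which is the deterministic mapping described above. The `vault` dictionary stands in for the separate secure store.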

The challenge? Personally identifiable information (PII) extends far beyond obvious identifiers like names and addresses. Enterprise workflows must detect 100+ data types including credit card numbers, medical records, IP addresses, biometric data, and even behavioral patterns that can identify individuals when combined.

For teams seeking comprehensive protection without configuration overhead, Caviard.ai offers a Chrome extension that detects and masks sensitive information locally in real-time—before prompts reach ChatGPT or DeepSeek. It automatically identifies 100+ PII types while preserving context, and lets you toggle between original and redacted text with a keyboard shortcut.

The critical balance? Context preservation. Your AI prompts must remain effective after redaction. Replace "My client Sarah Johnson needs tax advice" with "My client [NAME] needs tax advice" and the prompt still works—ChatGPT doesn't need the actual name to provide valuable guidance.

Step-by-Step Guide: Implementing Real-Time Redaction in Your ChatGPT Workflows

Protecting sensitive data in your ChatGPT interactions doesn't have to be complicated. With the right approach and tools, you can establish enterprise-grade privacy workflows that automatically sanitize your prompts before they reach AI services.

Choose Your Redaction Strategy: Manual vs. Automated

The first decision is whether to implement manual or automated redaction. Manual approaches require team members to identify and remove sensitive data themselves, a process that is time-consuming and error-prone. The article "7 Things You Should Never Share with ChatGPT" recommends sanitization or redaction tools that automatically detect PII and replace it before the prompt is sent, so the original values are not exposed.

For enterprise workflows, automated solutions are the clear winner. Tools like Caviard.ai provide real-time PII detection that identifies 100+ types of sensitive data—including names, addresses, and credit card numbers—automatically as you type, with all processing happening locally in your browser. This zero-configuration approach eliminates human error while maintaining conversation context.

Configure Your Automated PII Detection Rules

Once you've selected an automated tool, configure detection rules specific to your organization's needs. The article "List of Key Features to Look for in ChatGPT Data Redaction Tools in 2025" recommends implementing automated PII detection and flagging business-critical information patterns.

Most enterprise redaction tools allow you to customize detection patterns beyond standard PII categories. Add company-specific patterns like internal project codes, proprietary terminology, or customer identification formats. This ensures comprehensive protection tailored to your unique data landscape.
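Company-specific rules of this kind often come down to a labeled list of patterns. The sketch below is illustrative only: the `PRJ-` and `CUST-` formats, the codename "Atlas", and the `Rule` and `apply_rules` names are assumptions, not the configuration format of any specific tool.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    label: str           # placeholder text that replaces a match
    pattern: re.Pattern  # compiled detection pattern


# Hypothetical company-specific formats; replace with your own conventions.
CUSTOM_RULES = [
    Rule("PROJECT_CODE", re.compile(r"\bPRJ-\d{4}\b")),
    Rule("CUSTOMER_ID", re.compile(r"\bCUST-[A-Z]{2}\d{6}\b")),
    Rule("CODENAME", re.compile(r"\bProject (?:Phoenix|Atlas)\b", re.IGNORECASE)),
]


def apply_rules(text: str, rules: list[Rule] = CUSTOM_RULES) -> str:
    """Apply each rule in order, replacing matches with a bracketed label."""
    for rule in rules:
        text = rule.pattern.sub(f"[{rule.label}]", text)
    return text
```

Keeping the rules as data rather than code makes it easier for a security team to review and extend the list without touching the redaction logic.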

Before and After: Redaction in Action

Let's examine how effective redaction transforms a risky prompt into a privacy-safe one:

Before Redaction: "Hi, I need advice on a financial issue. My name is James Smith and I live at 123 Main Street, New York. My credit card 4782-5510-3267-8901 was charged..."

After Redaction: "Hi, I need advice on a financial issue. My name is [NAME] and I live at [ADDRESS]. My credit card [CREDIT_CARD] was charged..."

Notice how the redacted version preserves conversational context while removing the identifying details. According to "Data Redaction: The Essential Guide to Protecting Sensitive Data," redaction lets businesses remediate unprotected sensitive data while keeping the files, and the regulation-safe information in them, available for future use.
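The card-number part of this example can be approximated with a single pattern plus a local mapping that supports switching back to the original text. This is a rough sketch, not a description of how any extension implements it: `CARD_RE`, `redact`, and `restore` are hypothetical names, and names and street addresses are left out because they usually need a trained entity recognizer rather than a regular expression.

```python
import re

# Matches 16 digits in groups of four, optionally separated by "-" or a space.
CARD_RE = re.compile(r"\b(?:\d{4}[- ]?){3}\d{4}\b")


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Mask card numbers and return the redacted text plus a local mapping."""
    mapping: dict[str, str] = {}

    def swap(match: re.Match) -> str:
        placeholder = f"[CREDIT_CARD_{len(mapping) + 1}]"
        mapping[placeholder] = match.group(0)
        return placeholder

    return CARD_RE.sub(swap, text), mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Swap placeholders back to the original values (the toggle)."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text
```

Placeholders are numbered so that each one can be restored to its own value. The mapping never leaves the machine in this design, which is what makes the toggle safe to offer.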

Establish Pre-Prompt Sanitization Checklists

Even with automation, implement verification checkpoints. Create a lightweight pre-submission checklist:

- Verify automatic redactions look reasonable

- Check for context-specific sensitive terms unique to your industry

- Confirm toggle functionality works (switch between redacted and original)

- Review conversation history for any missed PII

The guide "How to Redact ChatGPT Data Using Open Source Privacy Tools" emphasizes using privacy-focused platforms that offer automatic data sanitization and reviewing conversations regularly.
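Parts of this checklist can be automated as a final gate before a prompt is submitted. The following sketch assumes a small set of residual patterns and a caller-supplied list of industry terms; `RESIDUAL_PATTERNS` and `presubmission_findings` are hypothetical names, and the patterns are examples rather than a complete detector.

```python
import re

# Illustrative residual-PII patterns; not an exhaustive list.
RESIDUAL_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d{4}[- ]?){3}\d{4}\b"),
}


def presubmission_findings(prompt: str, industry_terms: list[str]) -> list[str]:
    """Return human-readable findings; an empty list means the prompt passed."""
    findings = []
    for label, pattern in RESIDUAL_PATTERNS.items():
        if pattern.search(prompt):
            findings.append(f"possible {label} still present")
    lowered = prompt.lower()
    for term in industry_terms:
        if term.lower() in lowered:
            findings.append(f"context-specific term found: {term}")
    return findings
```

A check like this complements automatic redaction rather than replacing it: it catches the case where a redaction step was skipped or a company-specific term slipped through.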

The key is making privacy protection seamless—tools like Caviard work automatically with no configuration required, providing comprehensive protection while maintaining productivity. Your team can focus on getting valuable AI insights without constantly worrying about data exposure risks.

Enterprise-Grade Solution: Why Caviard.ai is Purpose-Built for Prompt Security

Think of enterprise data protection like insurance—you don't appreciate it until you desperately need it. The difference between wishful thinking and real security comes down to one question: Does your redaction solution actually work in the moments that matter most?

| Feature | Caviard.ai | Manual Redaction | Competitor Tools |
|---------|-----------|------------------|------------------|
| Processing Location | 100% local browser | Human review required | Often cloud-based |
| PII Detection Types | 100+ automatic | User-dependent | 20-50 average |
| Setup Complexity | Zero configuration | Training required | Complex setup |
| Real-Time Protection | As you type | After drafting | Pre-submission only |
| Toggle Functionality | Instant keyboard shortcut | N/A | Limited or none |

Here's what sets Caviard.ai apart: everything happens in your browser, period. No data touches external servers, no API calls expose your information, and no configuration hassles slow down deployment. While competitors route your sensitive data through cloud processing or require IT teams to configure detection rules, Caviard operates entirely locally—automatically identifying names, addresses, credit card numbers, and 100+ other PII types the moment you start typing.

The toggle functionality proves particularly valuable during audits. Switch between original and redacted text with a single keyboard shortcut to demonstrate both AI usage and protection measures to regulators without compromising actual data. This approach addresses what manual processes and complex enterprise tools struggle with: making privacy protection effortless enough that employees actually use it consistently. Protection that runs without extra effort is protection that gets used, and that consistency is what enterprise-grade security depends on.

Best Practices: 7 Essential Rules for Secure ChatGPT Prompt Engineering

Protecting enterprise data in AI workflows isn't just about having a policy—it's about implementing practical safeguards that work every single time. Here are seven battle-tested rules that security-conscious organizations are using to minimize data exposure risks in 2025.

1. Never Upload Sensitive Documents Directly

Treat AI chat interfaces like public forums. Instead of pasting entire contracts or financial reports, extract only non-sensitive excerpts. According to SaaS Privacy Compliance Requirements guidance, every vendor accessing personal data needs specific security measures and processing agreements—and that includes AI platforms.

2. Implement Automatic PII Detection

Manual redaction is error-prone and time-consuming. Caviard.ai provides a browser-based solution that automatically detects and masks over 100 types of sensitive data in real-time, operating entirely locally without sending information to external servers. This Chrome extension catches names, addresses, credit card numbers, and other PII before they reach ChatGPT or similar services, maintaining context while preserving privacy.

3. Use Pseudonyms and Generic References

Replace "Sarah Chen from accounting" with "Team Member A." Substitute "Project Phoenix budget" with "Q2 initiative costs." This simple habit dramatically reduces exposure if prompts are inadvertently logged or shared.

4. Deploy Mandatory Sanitization Checklists

Create pre-submission workflows that employees must complete. As emphasized in Enterprise AI Governance implementation guides, organizations need identity management, data loss prevention tools, and governance workflow systems for risk assessments before AI interactions.

5. Establish Regular Audit Procedures

According to Data Privacy Compliance 2025 requirements, continuous monitoring isn't optional—it's essential for maintaining user trust and avoiding penalties. Review AI chat logs quarterly, checking for patterns of sensitive data exposure.

6. Implement Data Classification Frameworks

Not all information carries equal risk. PII compliance frameworks emphasize knowing exactly where sensitive data lives, how it moves, and who can access it. Tag data as public, internal, confidential, or restricted, and establish clear rules about what can enter AI prompts at each level.
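A classification scheme like this can be expressed as an ordered set of levels plus a ceiling per tool. The sketch below is one possible encoding under assumed policy values: the tool names and the ceilings in `MAX_LEVEL_BY_TOOL` are hypothetical and would be set by your own governance process.

```python
from enum import IntEnum


class Classification(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical policy: the highest level allowed in a prompt, per tool.
MAX_LEVEL_BY_TOOL = {
    "public_chatbot": Classification.PUBLIC,
    "enterprise_ai_with_redaction": Classification.CONFIDENTIAL,
}


def may_enter_prompt(level: Classification, tool: str) -> bool:
    """Allow data only if its level is at or below the tool's ceiling.

    Tools without an entry in the policy are denied by default.
    """
    ceiling = MAX_LEVEL_BY_TOOL.get(tool)
    return ceiling is not None and level <= ceiling
```

Denying unknown tools by default means a newly adopted AI service stays blocked until someone makes an explicit decision about it.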

7. Monitor AI Usage Patterns Across Platforms

As noted by Data Privacy Day 2025 industry experts, advanced access control systems and monitoring tools help prevent unauthorized access while maintaining compliance with evolving data protection standards.

Real-World Success: Case Studies from Customer Support and Enterprise Teams

Organizations worldwide are discovering that AI adoption without proper data protection creates more problems than it solves. The shift toward prompt redaction isn't just a compliance checkbox—it's enabling measurable business transformation across customer support, IT operations, and enterprise workflows.

According to AI Productivity Statistics, employees using AI tools report an average 40% productivity increase, while 77% of C-suite leaders confirm tangible gains. However, these benefits only materialize when teams can confidently use AI without compromising sensitive data. This is where prompt redaction tools like Caviard.ai become game-changers—enabling teams to harness AI's full potential while maintaining enterprise-grade data privacy.

Customer service teams face a particularly acute challenge. Representatives handling sensitive inquiries need AI assistance but cannot risk exposing customer PII. Organizations implementing automatic redaction report staying compliant while still achieving the productivity gains that competitors give up for the sake of security. One pattern emerges consistently: teams using browser-based redaction extensions like Caviard experience faster AI adoption because employees trust the privacy controls operating locally in their browsers.

Financial services and healthcare organizations demonstrate the most dramatic outcomes. By deploying prompt redaction that detects 100+ types of sensitive data in real-time, these highly regulated industries achieve what seemed impossible—leveraging ChatGPT's capabilities without creating compliance risks. IT operations teams report similar success, using redacted prompts to troubleshoot systems containing customer data without manual sanitization delays. The toggle functionality that switches between original and redacted text proves particularly valuable during audits, demonstrating both AI usage and protection measures to regulators.

Conclusion: Building a Privacy-First AI Culture with Smart Redaction

The path forward for enterprise AI adoption isn't choosing between innovation and security—it's implementing systems that deliver both. Organizations successfully leveraging ChatGPT and similar tools share a common strategy: automatic, real-time data protection that operates invisibly alongside productivity gains.

Key Takeaways for Security Leaders:

- Implement browser-based redaction that catches 100+ PII types before prompts reach AI services

- Establish clear data classification frameworks determining what information can enter AI workflows

- Deploy automated detection systems rather than relying on manual employee vigilance

- Create audit trails demonstrating both AI usage and protection measures to regulators

The Samsung data leak and countless similar incidents prove that good intentions aren't enough. Your teams need tools that make privacy protection effortless—not another security policy they'll bypass under deadline pressure.

Caviard.ai's Chrome extension represents this practical approach: zero-configuration protection that automatically masks sensitive data locally in your browser, preserving conversation context while eliminating exposure risk. With toggle functionality to switch between original and redacted text, teams maintain productivity without sacrificing privacy.

Ready to protect your enterprise data without slowing down AI adoption? Install Caviard's free Chrome extension today and experience comprehensive PII protection that works automatically across ChatGPT, DeepSeek, and other AI platforms—all while keeping your data entirely on your machine.