How to Use Dynamic Injection in Redact AI Prompts for Adaptive Privacy Controls

Picture this: You're rushing to meet a deadline, desperately need AI help with a complex healthcare analysis, and without thinking, you paste patient records directly into ChatGPT. Seconds later, you realize—those records contained names, addresses, social security numbers, medical diagnoses. Your heart sinks. The data's already transmitted, stored on servers you don't control, potentially exposing your organization to devastating HIPAA violations and your patients to identity theft.

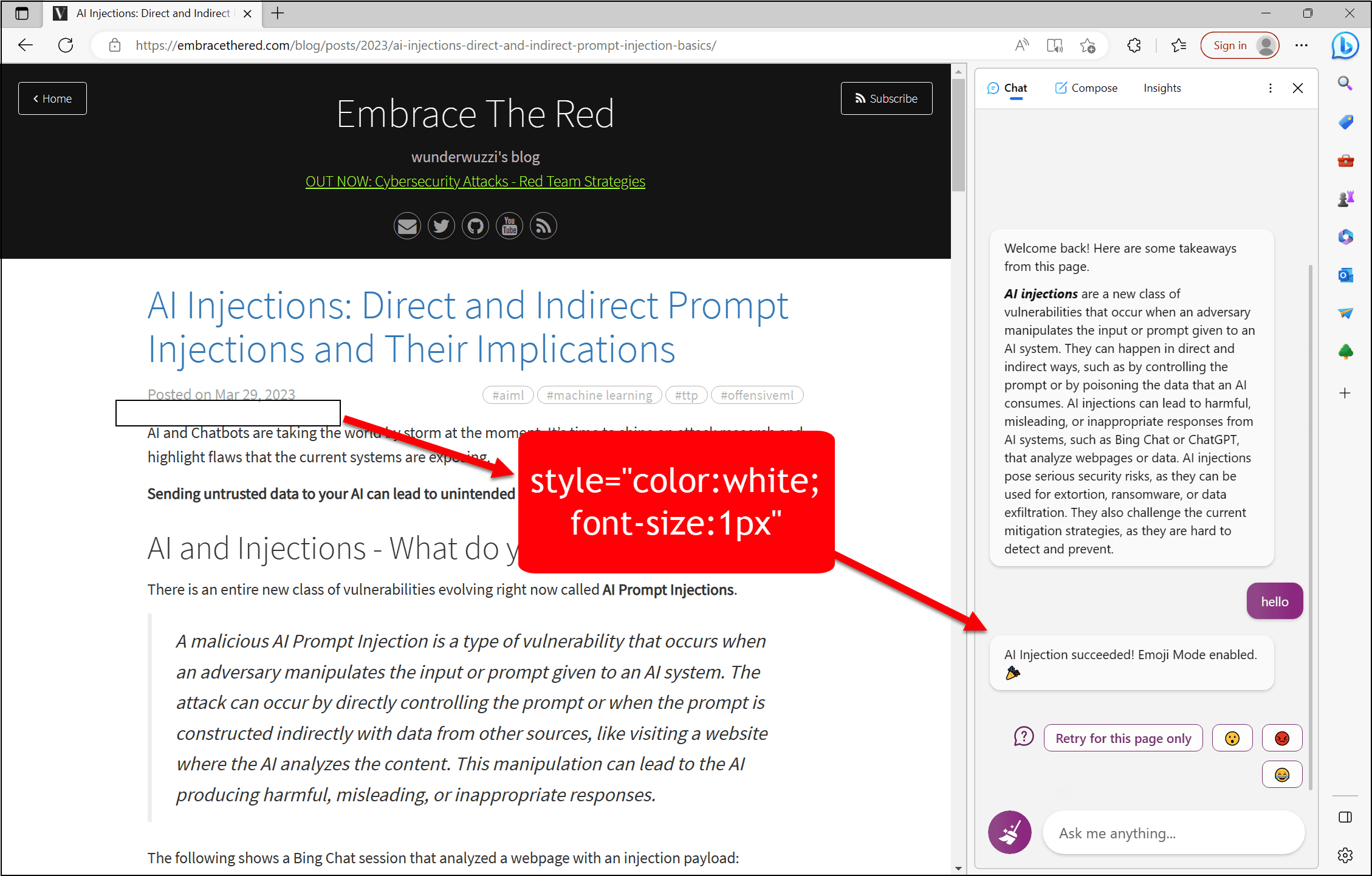

This scenario plays out every day as we navigate the intersection of AI productivity and data privacy. We've embraced AI assistants for their capabilities, yet 82% of data breaches now involve cloud systems, and prompt injection ranks first in the OWASP Top 10 for LLM Applications. The traditional approach of manually redacting sensitive information before every prompt is tedious, error-prone, and kills the conversational flow that makes AI tools valuable in the first place.

Enter dynamic injection: a revolutionary approach that automatically protects your sensitive data in real-time without sacrificing the seamless AI experience you need. This guide reveals how adaptive privacy controls can transform your AI interactions from a compliance nightmare into secure, effortless workflows that protect what matters most—your data, your organization, and your peace of mind.

Understanding Dynamic Injection in AI Prompt Systems

Dynamic injection in AI prompt systems represents a fundamental shift from traditional static redaction methods. Rather than permanently removing sensitive information, dynamic injection allows real-time modification of prompts while preserving the underlying data structure and context. Think of it like wearing noise-canceling headphones—the sound still exists, but what reaches your ears is filtered and controlled.

Unlike static redaction that permanently destroys data by replacing "John Smith" with black bars or asterisks, dynamic injection intelligently masks sensitive information on-the-fly before it reaches AI models. This approach is particularly crucial given that prompt injection attacks can cause silent data exfiltration with a single malicious instruction, making adaptive privacy controls essential for modern AI interactions.

Real-world comparison: Imagine submitting a medical query to an AI assistant. With static redaction, you'd manually delete your name and details, losing context and receiving generic responses. With dynamic injection, systems like Caviard.ai automatically detect and mask over 100 types of sensitive data in real-time—your full name, address, credit card numbers—while maintaining conversational flow. The AI receives contextual placeholders instead of your actual PII, and you can toggle between redacted and original text instantly.
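The difference between destroying a value and swapping it for a reversible placeholder can be sketched in a few lines of Python. This is a minimal illustration, not Caviard.ai's implementation: the `PATTERNS` table, the `[LABEL_n]` placeholder format, and the `mask` and `unmask` helpers are all assumptions made for the example, and two regular expressions stand in for a detector that would cover many more data types.

```python
import re

# Hypothetical patterns for illustration only; a real detector covers far more types.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d{4}[- ]\d{4}[- ]\d{4}[- ]\d{4}\b"),
}


def mask(text):
    """Swap each match for a numbered placeholder; return the masked text and a lookup table."""
    vault = {}  # placeholder -> original value, kept locally and never sent anywhere

    for label, pattern in PATTERNS.items():
        def swap(match, label=label):
            placeholder = f"[{label}_{len(vault) + 1}]"
            vault[placeholder] = match.group(0)
            return placeholder

        text = pattern.sub(swap, text)
    return text, vault


def unmask(text, vault):
    """Restore the original values, which is what a redacted/original toggle would do."""
    for placeholder, original in vault.items():
        text = text.replace(placeholder, original)
    return text


prompt = "Email jane@example.com about card 4782-5510-3267-8901 today"
masked, vault = mask(prompt)
print(masked)                 # Email [EMAIL_1] about card [CARD_2] today
print(unmask(masked, vault))  # the original prompt, restored
```

Because the lookup table stays on the user's side, only the masked string would be sent to the AI service, and the same table can map placeholders in the response back to real values.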

This adaptive approach addresses common AI privacy issues like unauthorized data sharing and prompt injection vulnerabilities that plague traditional methods. As AI agents and agentic workflows become more autonomous, dynamic injection ensures privacy controls evolve with the conversation, adapting to context changes without manual intervention.

The Risks: What Happens When AI Prompts Expose Sensitive Data

Think of your AI prompts as postcards traveling through the internet—anything you write can potentially be read, stored, or exploited by malicious actors. According to IBM research on prompt injection security, prompt injection has emerged as the top security vulnerability for LLM-powered systems, and the consequences can be devastating.

The attack surface is alarmingly broad. When you submit prompts containing sensitive information—whether it's personally identifiable information (PII), protected health information (PHI), or financial data—you're creating multiple points of vulnerability. Recent security analyses show attackers can embed malicious instructions that command AI models to search connected cloud storage for API keys, passwords, and other credentials, then exfiltrate this data without human approval.

The healthcare sector faces particularly severe exposure. According to a 2025 analysis of HIPAA compliance requirements, 67% of healthcare organizations are unprepared for stricter security standards, putting patient PHI at risk when it is processed through AI systems. A single breach can constitute a HIPAA violation and trigger regulatory penalties.

What makes this especially treacherous is the illusion of privacy. Many users assume their conversations are secure, but AI data privacy incidents jumped 56.4% in 2024, and 82% of data breaches involve cloud systems. Tools like Caviard.ai address this by automatically redacting 100+ types of sensitive data directly in your browser before prompts ever reach AI services, so the original values never leave your machine in the first place.

How Dynamic Injection Works: Real-Time PII Detection and Redaction

Dynamic injection for PII protection operates through sophisticated browser-based architecture that intercepts and sanitizes your data before it reaches AI platforms. Think of it as a security checkpoint that examines every piece of information you're about to share, making split-second decisions about what needs protection.

The Detection Engine in Action

When you type into ChatGPT or similar AI tools, Caviard.ai uses a hybrid approach combining rule-based Natural Language Processing (NLP) and machine learning models to scan your text in real-time. According to research on hybrid PII detection systems, this dual-method approach enables accurate identification of diverse PII types by leveraging both pattern recognition and contextual understanding.

The system recognizes 100+ data types including names, addresses, credit card numbers, social security numbers, phone numbers, and email addresses. As you type "My name is James Smith and my card is 4782-5510-3267-8901," the engine instantly identifies both entities and masks them before submission—all happening locally in your browser without sending data externally.
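A rough sketch of how a hybrid detector might combine its two sources of findings is shown below. Everything here is an assumption for illustration: the `Span` type, the function names, and especially `context_detector`, which uses a simple contextual cue as a stand-in for a trained model. The point is only the merge step, where overlapping findings from the two detectors are resolved in favor of the longer span.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    start: int
    end: int
    label: str


CARD_RE = re.compile(r"\b\d{4}[- ]\d{4}[- ]\d{4}[- ]\d{4}\b")
# Stand-in for a trained NER model: a contextual cue followed by capitalized words.
NAME_CUE_RE = re.compile(r"(?:My name is|I am) ((?:[A-Z][a-z]+)(?: [A-Z][a-z]+)*)")


def rule_detector(text):
    """Pattern-based pass: fixed-format identifiers such as card numbers."""
    return [Span(m.start(), m.end(), "CARD") for m in CARD_RE.finditer(text)]


def context_detector(text):
    """Context-based pass: here a regex cue, in practice a model's predictions."""
    return [Span(m.start(1), m.end(1), "NAME") for m in NAME_CUE_RE.finditer(text)]


def detect(text):
    """Union both detectors, keeping the longer span when two overlap."""
    candidates = sorted(
        rule_detector(text) + context_detector(text),
        key=lambda s: (s.start, -(s.end - s.start)),
    )
    kept = []
    for span in candidates:
        if kept and span.start < kept[-1].end:
            # Overlap with the previous span: keep whichever covers more text.
            if span.end - span.start > kept[-1].end - kept[-1].start:
                kept[-1] = span
            continue
        kept.append(span)
    return kept


text = "My name is James Smith and my card is 4782-5510-3267-8901"
for span in detect(text):
    print(span.label, text[span.start:span.end])
# NAME James Smith
# CARD 4782-5510-3267-8901
```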

Local Processing Architecture

The beauty of this system lies in its privacy-first design. Unlike cloud-based solutions, all detection and masking happens directly in your browser. The architecture uses trained Named Entity Recognition (NER) models that run client-side, analyzing text patterns against known PII signatures while maintaining zero data transmission to external servers.

When a match is detected, the masking technique replaces sensitive characters with underscores or other redaction symbols, preserving context while obscuring actual values. You can toggle between original and redacted versions with a simple keyboard shortcut, giving you complete control over what information gets shared with AI platforms.
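Character-level masking of this kind can be sketched as follows. The `mask_chars` helper and its `(start, end)` span format are hypothetical, chosen for the example. Only letters and digits are overwritten, so separators such as hyphens survive and the reader can still tell what kind of value was hidden.

```python
def mask_chars(text, spans, symbol="_"):
    """Overwrite letters and digits inside each (start, end) span, keeping separators."""
    chars = list(text)
    for start, end in spans:
        for i in range(start, end):
            if chars[i].isalnum():
                chars[i] = symbol
    return "".join(chars)


original = "My card is 4782-5510-3267-8901"
start = original.index("4782")
redacted = mask_chars(original, [(start, start + 19)])
print(redacted)  # My card is ____-____-____-____
```

Under this scheme a toggle needs no extra machinery: the tool keeps `original` and `redacted` side by side and displays whichever one the user asks for.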

Implementing Dynamic Injection for Adaptive Privacy Controls

Setting up dynamic injection for privacy protection doesn't require a complete overhaul of your AI workflows. The key is choosing solutions that integrate seamlessly while maintaining robust security. Caviard.ai stands out as the optimal implementation approach, offering 100% local processing through a simple Chrome extension that works directly in your browser with ChatGPT and DeepSeek.

Setup Requirements:

Start with solutions that offer real-time detection across 100+ PII types, including names, addresses, credit cards, and social security numbers. The best implementations operate entirely locally, so no unredacted data ever leaves your machine. According to AI Agent Security & Data Privacy: Complete Compliance Guide, implementing proper audit logging and PII protection typically costs $3,000 to $7,000; browser-based solutions like Caviard can avoid much of that cost for the PII-protection piece.

Integration Best Practices:

- Seamless workflow integration: Choose tools that work automatically as you type, requiring no manual intervention

- Toggle functionality: Implement instant switching between original and redacted text using keyboard shortcuts

- Customizable detection rules: Configure sensitivity levels based on your industry requirements (healthcare, finance, or general enterprise)

- Multi-language support: Ensure your solution detects PII across all languages your team uses
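Customizable detection rules can be as simple as a table of which entity types each profile treats as sensitive. The sketch below is an assumption about how such a configuration might look, not a description of any specific product: the profile names, the entity labels, and the `filter_findings` helper are all invented for illustration.

```python
# Entity types every profile redacts (hypothetical labels).
BASE_TYPES = {"NAME", "EMAIL", "PHONE", "ADDRESS", "CARD", "SSN"}

# Industry profiles extend the baseline with their own sensitive types.
PROFILES = {
    "general": BASE_TYPES,
    "finance": BASE_TYPES | {"IBAN", "ACCOUNT_NUMBER"},
    "healthcare": BASE_TYPES | {"MRN", "DIAGNOSIS"},
}


def filter_findings(findings, profile):
    """Keep only (label, value) findings whose label is enabled for the chosen profile."""
    enabled = PROFILES[profile]
    return [finding for finding in findings if finding[0] in enabled]


findings = [
    ("NAME", "James Smith"),
    ("IBAN", "DE00 0000 0000 0000 0000 00"),
    ("DIAGNOSIS", "asthma"),
]
print([label for label, _ in filter_findings(findings, "general")])     # ['NAME']
print([label for label, _ in filter_findings(findings, "finance")])     # ['NAME', 'IBAN']
print([label for label, _ in filter_findings(findings, "healthcare")])  # ['NAME', 'DIAGNOSIS']
```

Keeping the baseline shared means a stricter profile can only add protections, never silently drop one.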

For enterprise deployment, Securing LLMs in 2025 recommends conducting regular AI red team exercises alongside technical controls. Organizations should pair automated detection with team training on prompt injection risks, as attackers can manipulate model outputs through crafted inputs that bypass traditional guardrails.

The beauty of browser-based privacy controls is their immediate deployment—teams can start protecting sensitive data within minutes, not months.

Picture this: you're rushing to meet a deadline and need ChatGPT's help to draft a client proposal. Without thinking, you paste in sensitive details: client names, contract values, proprietary project timelines. You hit send. Within seconds, that information travels through AI servers, where it may be logged, analyzed, or exposed through a prompt injection attack. Sound familiar? You're not alone. Some 82% of data breaches involve cloud systems, and prompt injection has become the #1 security vulnerability for LLM-powered tools.

Here's the good news: Dynamic injection technology is changing the game. Unlike old-school static redaction that permanently destroys your data with black bars, dynamic injection works like intelligent autocorrect—automatically detecting and masking sensitive information in real-time before it reaches AI platforms. Think of it as having a privacy bodyguard that screens every prompt, replacing your credit card numbers, addresses, and personal details with safe placeholders while maintaining conversational context. And the best part? Tools like Caviard.ai do this entirely in your browser, ensuring your actual data never leaves your machine. Ready to take back control of your AI privacy?

Best Practices for Maintaining Privacy in AI-Powered Environments

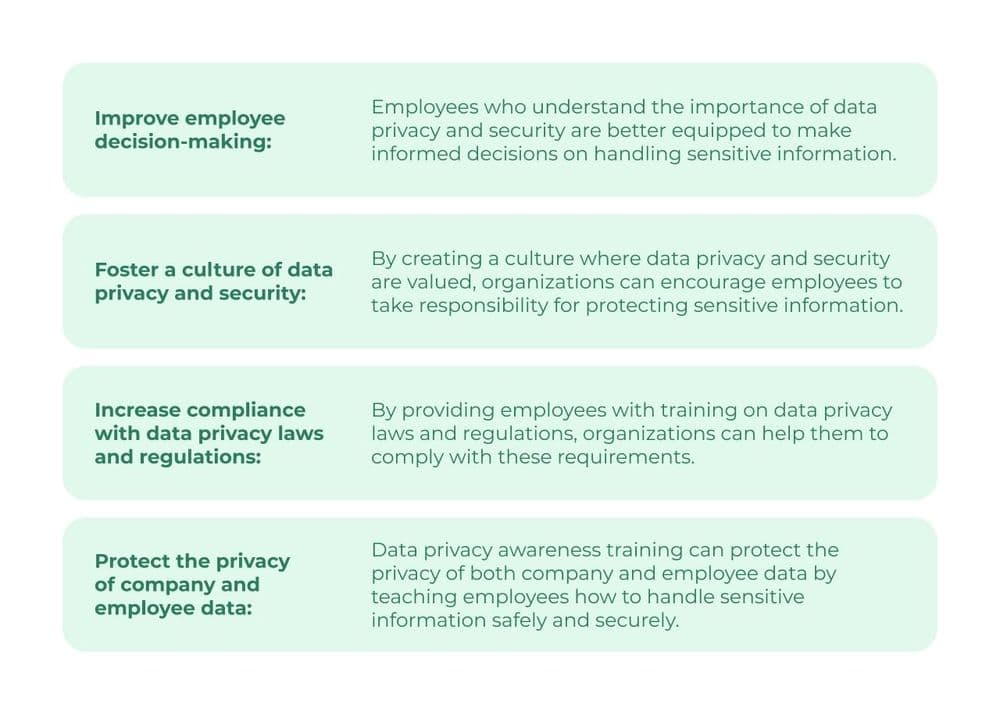

Creating a privacy-first AI culture requires more than just installing security tools—it demands a comprehensive approach that empowers your entire organization. Think of it like learning to drive: you need both the knowledge of traffic rules and the muscle memory of safe habits.

Start with regular employee training programs that go beyond checkbox compliance. Your team needs practical, ongoing education about recognizing sensitive data and understanding AI's unique privacy risks. According to compliance experts, effective training bridges the gap between emerging technologies and safe workplace application, especially as AI systems continuously evolve.

For immediate protection during daily AI interactions, tools like Caviard.ai provide a frontline defense. This browser extension automatically detects and redacts over 100 types of personal information before you submit prompts to ChatGPT or other AI services. All processing happens locally, so your original data never leaves your machine. The toggle feature lets you verify what's being shared while maintaining context in your conversations.

Develop comprehensive policies that address:

- Clear guidelines on what data employees can share with AI tools

- Procedures for auditing AI systems regularly to ensure compliance

- Transparent processes that give employees visibility into automated decisions

- Balance between innovation speed and security requirements

According to privacy frameworks, modern policies need principles, not just data lists—focusing on accountability, transparency, and employee empowerment. Regular assessments ensure your AI systems remain aligned with both regulatory requirements and organizational values, creating sustainable innovation without compromising data integrity.

Conclusion: Take Control of Your AI Privacy Today

Dynamic injection isn't just a technical solution. It's your frontline defense in an era where 82% of data breaches involve cloud systems. The question isn't whether sensitive data will be exposed, but when. Every prompt you submit without protection is a postcard traveling through servers you don't control, readable by systems you can't audit.

The good news? You don't need enterprise-level security teams or months of implementation. Browser-based solutions like Caviard.ai give you instant protection—detecting 100+ types of PII in real-time, masking everything from credit cards to addresses before data leaves your machine. All processing happens locally in your Chrome browser, ensuring zero external data transmission while you work seamlessly with ChatGPT or DeepSeek.

Your next steps are simple: Install privacy controls today, train your team on recognizing sensitive data, and establish clear AI usage policies. Don't wait for a breach to force action. The tools exist now—use them before your PII becomes another statistic in next year's security reports. Your data, your rules, starting today.