How to Use Prompt Doctor Techniques to Improve Redact AI Prompt Quality

Ever spent an hour crafting the "perfect" prompt, only to get a response that sounds like it was written by a confused robot? You're not alone. Most people treat AI like a Magic 8 Ball: shake it, ask vaguely, and hope for wisdom. But here's the truth: your AI is only as good as the instructions you give it. Think of it like visiting a doctor with chest pain and just saying "I feel bad." You'll get generic advice when what you really need is a specific diagnosis and treatment.

The same principle applies to AI prompts. Generic inputs produce generic outputs. But when you apply prompt doctor techniques, treating your prompts like patients that need diagnosis, treatment, and follow-up care, everything changes. You can turn vague, frustrating responses into precise, actionable results that solve your problems. In this guide, you'll discover five essential techniques, learn to diagnose what's wrong with underperforming prompts, and see real-world examples, including one report of 340% higher ROI on AI investments among organizations with sophisticated prompt engineering practices. And because protecting your sensitive data matters just as much as getting quality results, we'll show you how to safeguard personal information throughout your prompt refinement process.

What Are Prompt Doctor Techniques? Understanding the Fundamentals

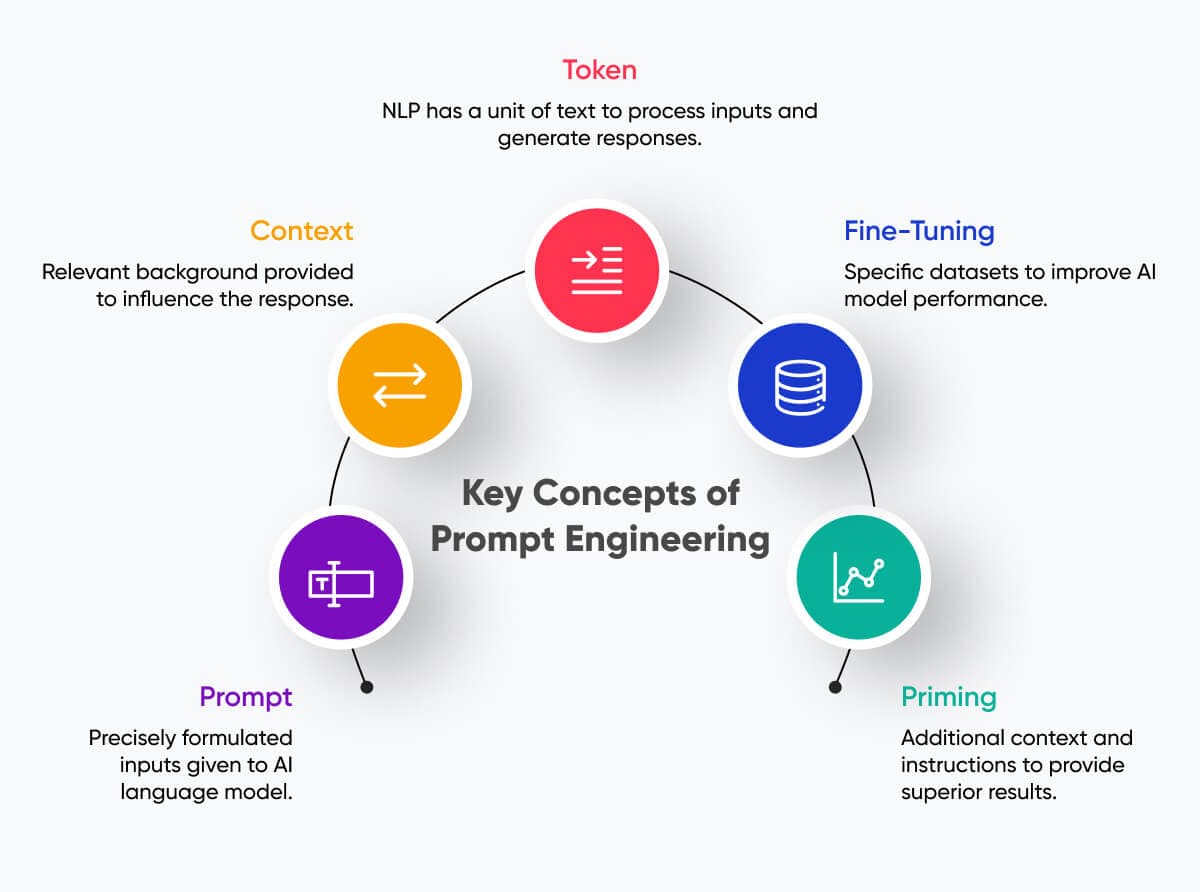

Think of your AI prompts like patients visiting a clinic—some are healthy and get great results, while others need diagnosis and treatment. Prompt doctor techniques apply this medical metaphor to the practice of prompt engineering, which is the art of designing and refining inputs to help AI models deliver the responses you actually need.

Just as a doctor examines symptoms to diagnose illness, prompt doctors examine "sick" prompts that produce vague, inaccurate, or unhelpful AI outputs. According to IBM's definition of prompt engineering, the process involves "writing, refining and optimizing inputs to encourage generative AI systems to create specific, high-quality outputs." The cure often lies in three fundamental principles: specificity, context, and iteration.

Specificity means replacing generic requests with detailed instructions. Instead of asking "Write therapy notes," a better prompt defines the format, sections, and clinical details needed. Context involves providing examples and background information—techniques like few-shot prompting where you give 2-3 examples to guide the AI's understanding. Iteration recognizes that your first prompt is rarely perfect; you diagnose what's missing and refine accordingly.

Before treating your prompts, consider protecting your sensitive data. Caviard.ai is a Chrome extension that automatically redacts personal information like names, addresses, and credit card numbers in real time, right in your browser, before data reaches AI services. It's like having a privacy doctor that works alongside your prompt doctor, so your refinement process doesn't compromise security. The extension detects 100+ types of sensitive data with zero configuration, which makes it a practical option for healthcare professionals and anyone handling confidential information in AI workflows.

The 5 Essential Prompt Doctor Techniques for Better AI Quality

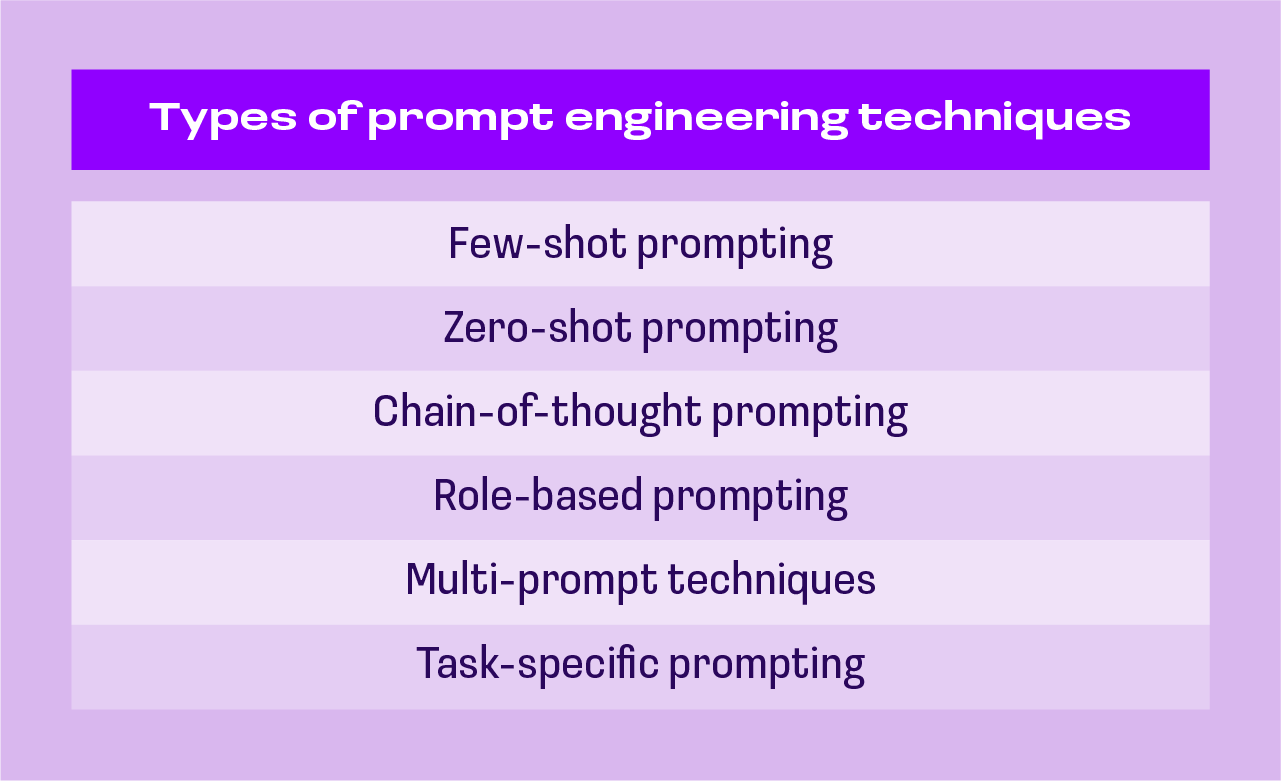

Mastering prompt engineering transforms mediocre AI outputs into precise, actionable results. According to the "Prompting Techniques" page of the Prompt Engineering Guide, these five core techniques form the foundation of effective AI communication.

1. Adding Context and Role-Playing

Start by giving your AI a specific identity and context. Instead of asking "Write a blog post," try "You're a content marketer with 10 years of experience. Write a blog post targeting small business owners." The article "Mastering Prompt Engineering: Techniques, Examples, and Best Practices" reports that role prompting dramatically improves output relevance by establishing clear parameters.
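As a rough illustration of role prompting, the sketch below builds a chat-style message list. The `system`/`user` message layout is a common chat convention assumed here, and `build_role_prompt` is a hypothetical helper, not part of any particular library.

```python
def build_role_prompt(role: str, task: str, audience: str) -> list[dict[str, str]]:
    """Return chat-style messages that assign a role before stating the task."""
    return [
        # The system message carries the identity and background.
        {"role": "system", "content": f"You are {role}."},
        # The user message carries the concrete request and its audience.
        {"role": "user", "content": f"{task} The target audience is {audience}."},
    ]


messages = build_role_prompt(
    role="a content marketer with 10 years of experience",
    task="Write a blog post.",
    audience="small business owners",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role separate from the task makes it easy to reuse the same identity across many requests.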

2. Providing Examples and Few-Shot Prompting

Show the AI exactly what you want. As explained in "Few-Shot Prompting: Techniques, Examples, Best Practices," providing 2-3 concrete input-output examples guides the model toward your desired format and style. For instance, if you want product descriptions in a specific tone, include sample descriptions that match your brand voice.
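One way to assemble a few-shot prompt is sketched below. The `Input:`/`Output:` labels, the helper name `build_few_shot_prompt`, and the sample product descriptions are all illustrative assumptions.

```python
def build_few_shot_prompt(
    instruction: str, examples: list[tuple[str, str]], new_input: str
) -> str:
    """Combine an instruction, worked examples, and the new input into one prompt."""
    parts = [instruction, ""]
    for source, target in examples:
        parts += [f"Input: {source}", f"Output: {target}", ""]
    # End with an open "Output:" so the model continues the pattern.
    parts += [f"Input: {new_input}", "Output:"]
    return "\n".join(parts)


few_shot_prompt = build_few_shot_prompt(
    instruction="Write a one-sentence product description in a friendly brand voice.",
    examples=[
        ("steel water bottle", "Meet the bottle that keeps your drinks cold and your bag dry."),
        ("desk lamp", "Say hello to a lamp that is easy on your eyes and your desk space."),
    ],
    new_input="privacy browser extension",
)
print(few_shot_prompt)
```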

3. Chain-of-Thought Prompting for Complex Tasks

For multi-step problems, ask the AI to "think step-by-step." The guide "Best Practices for AI Prompting 2025" highlights how chain-of-thought techniques break down complex reasoning into manageable parts, dramatically improving accuracy for analytical tasks.
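A minimal sketch of a chain-of-thought wrapper follows. It assumes you ask the model to finish with a line starting with `Answer:` so the result can be pulled out of the reasoning. Both helper names are hypothetical, and `sample_response` is hand-written for illustration rather than real model output.

```python
from typing import Optional


def add_step_by_step(question: str) -> str:
    """Append a step-by-step instruction and a fixed marker for the final answer."""
    return (
        f"{question}\n\n"
        "Think step-by-step. Number each step, then give the final result "
        "on its own line starting with 'Answer:'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker, or None if it is absent."""
    for line in reversed(response.splitlines()):
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            return stripped[len("Answer:"):].strip()
    return None


cot_prompt = add_step_by_step(
    "A plan costs $12 per month. What does a year cost with a 25% discount?"
)
# Hand-written stand-in for a model reply.
sample_response = "1. 12 * 12 = 144\n2. 144 * 0.75 = 108\nAnswer: $108"
print(extract_answer(sample_response))
```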

4. Defining Output Format Specifications

Be explicit about structure. Specify "Provide a 3-paragraph response with bullet points" or "Format as JSON with these fields." The "Prompt Engineering: Techniques, Examples & Best Practices Guide" emphasizes that clear formatting instructions eliminate ambiguity and ensure consistent outputs.
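When you request JSON, it helps to check that the reply actually matches the requested shape. The sketch below assumes three illustrative field names (`title`, `summary`, `tags`) and uses a hand-written `sample_raw` string in place of a real model reply.

```python
import json

# Field names are illustrative assumptions, not a required schema.
REQUIRED_FIELDS = {"title", "summary", "tags"}


def build_json_prompt(task: str) -> str:
    """Attach an explicit JSON format specification to a task."""
    fields = ", ".join(sorted(REQUIRED_FIELDS))
    return (
        f"{task}\n"
        f"Format the answer as a JSON object with exactly these fields: {fields}. "
        "Return only the JSON."
    )


def parse_response(raw: str) -> dict:
    """Parse a reply and fail loudly if it ignores the requested format."""
    data = json.loads(raw)  # raises ValueError (JSONDecodeError) on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return data


json_prompt = build_json_prompt("Summarize this article about prompt refinement.")
sample_raw = '{"title": "Prompt care", "summary": "Diagnose, treat, retest.", "tags": ["prompts"]}'
print(parse_response(sample_raw)["title"])
```

A failed parse is a useful signal in itself: it tells you the format instruction needs to be stricter before you judge the content.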

5. Iterative Refinement Through Testing

Good prompts emerge through experimentation. The "Iterative Prompt Refinement: Step-by-Step Guide" recommends systematically tweaking prompts based on outputs, testing variations, and incorporating feedback loops until the results meet your needs.
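The refinement loop can be sketched as scoring several prompt variants and keeping the best one. Everything here is an assumption made for illustration: `stub_model` stands in for a real model call, and the keyword-and-length `score` is a deliberately crude quality check.

```python
from typing import Callable


def score(output: str, required_terms: list[str], max_words: int) -> float:
    """Fraction of checks passed: each required term present, plus a length limit."""
    checks = [term.lower() in output.lower() for term in required_terms]
    checks.append(len(output.split()) <= max_words)
    return sum(checks) / len(checks)


def pick_best(
    variants: list[str],
    model: Callable[[str], str],
    required_terms: list[str],
    max_words: int,
) -> tuple[float, str]:
    """Run every variant through the model and return (best score, best prompt)."""
    scored = [(score(model(p), required_terms, max_words), p) for p in variants]
    return max(scored, key=lambda pair: pair[0])


def stub_model(prompt: str) -> str:
    # Placeholder for a real model call; the reply depends on the prompt wording.
    if "privacy" in prompt:
        return "This extension protects privacy by redacting PII locally."
    return "This extension is great."


variants = [
    "Describe the extension.",
    "Describe the extension, focusing on privacy and PII.",
]
best_score, best_prompt = pick_best(variants, stub_model, ["privacy", "PII"], max_words=20)
print(best_score, best_prompt)
```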

Privacy Pro Tip: When refining prompts with sensitive information, use Caviard.ai to automatically redact personal data like names, addresses, and credit card numbers before they reach AI services. This Chrome extension works entirely locally in your browser, protecting your privacy while you perfect your prompting techniques without any configuration needed.

Ever send a prompt to ChatGPT and get back something completely useless? You end up troubleshooting why your AI assistant sounds like it's having an existential crisis instead of helping you draft that email. Here's the thing: the problem usually isn't the AI; it's your prompt. Think of AI like a brilliant intern who needs crystal-clear instructions. Without them, you get rambling nonsense. With the right "prompt doctor" techniques, you get far more consistent results.

This guide reveals five proven techniques that transform vague prompts into precision instruments. You'll learn exactly how to diagnose poor prompt quality, apply systematic fixes, and protect your sensitive data while experimenting. Whether you're drafting therapy notes, analyzing customer feedback, or creating marketing content, these strategies will dramatically improve your AI outputs. Plus, you'll discover how tools like Caviard.ai automatically redact personal information before it reaches AI services—so you can refine prompts with real-world data without compromising privacy. Let's turn your AI frustrations into productivity wins.

Step-by-Step: Diagnosing and Fixing Poor Prompt Quality

Think of diagnosing a prompt like troubleshooting a malfunctioning car—you need to identify the symptoms before you can fix the problem. Bad AI prompts typically produce vague, generic responses that lack depth or miss the mark entirely. When your AI output rambles without focus or fails to address your specific needs, it's time for a systematic tune-up.

Identify the Symptoms

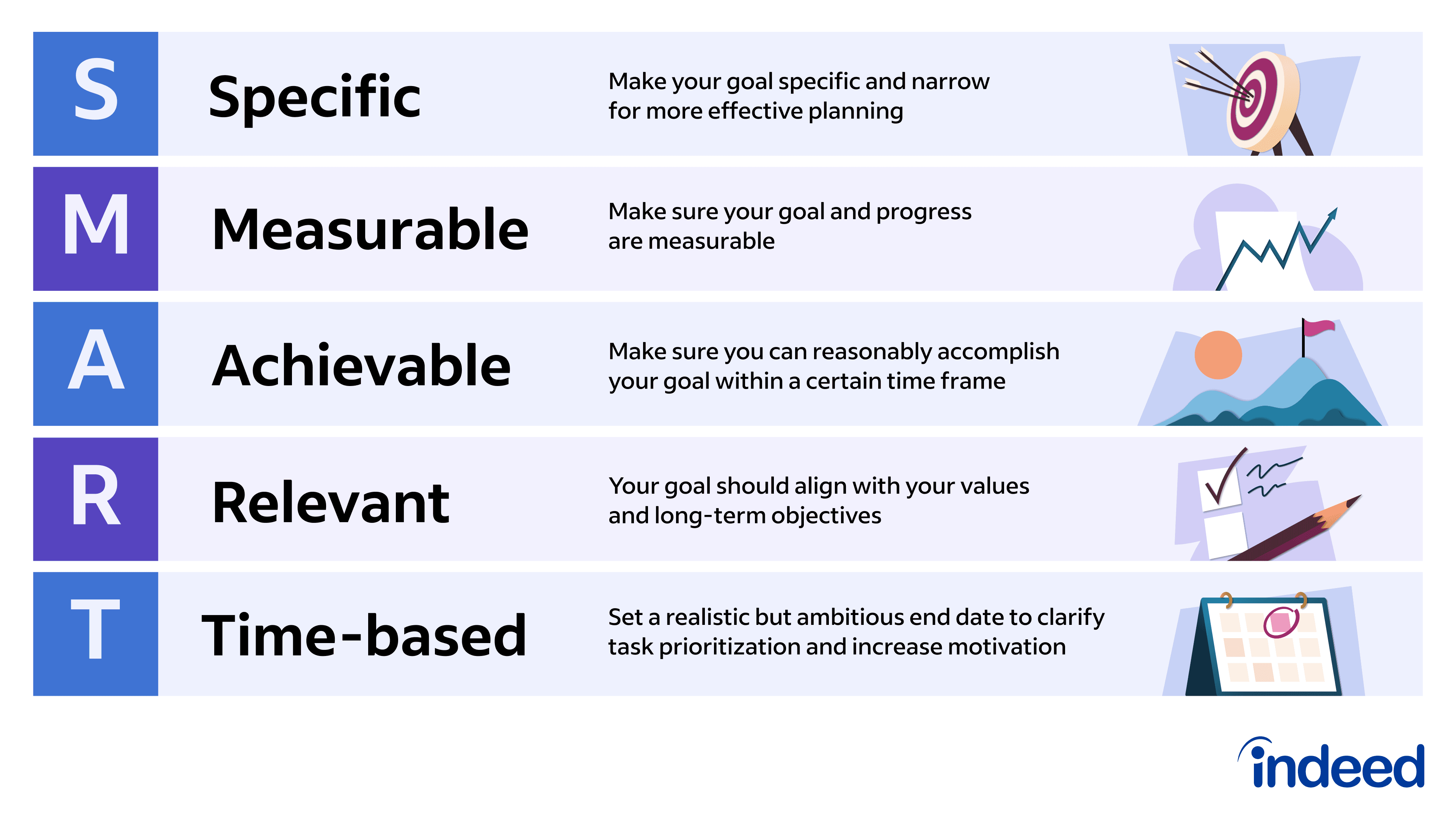

Start by analyzing your prompt's output quality. Is the response too broad? Does it ignore key constraints? Common red flags include inconsistent formatting, missing context, or responses that require multiple attempts to get right. According to prompt engineering experts, bad prompts cost money through wasted API calls and lost productivity.

Before: "I want to write something."

After: "Write a 500-word product description for a Chrome extension that protects PII data, targeting privacy-conscious professionals who use ChatGPT daily. Use a conversational tone and include three key benefits."

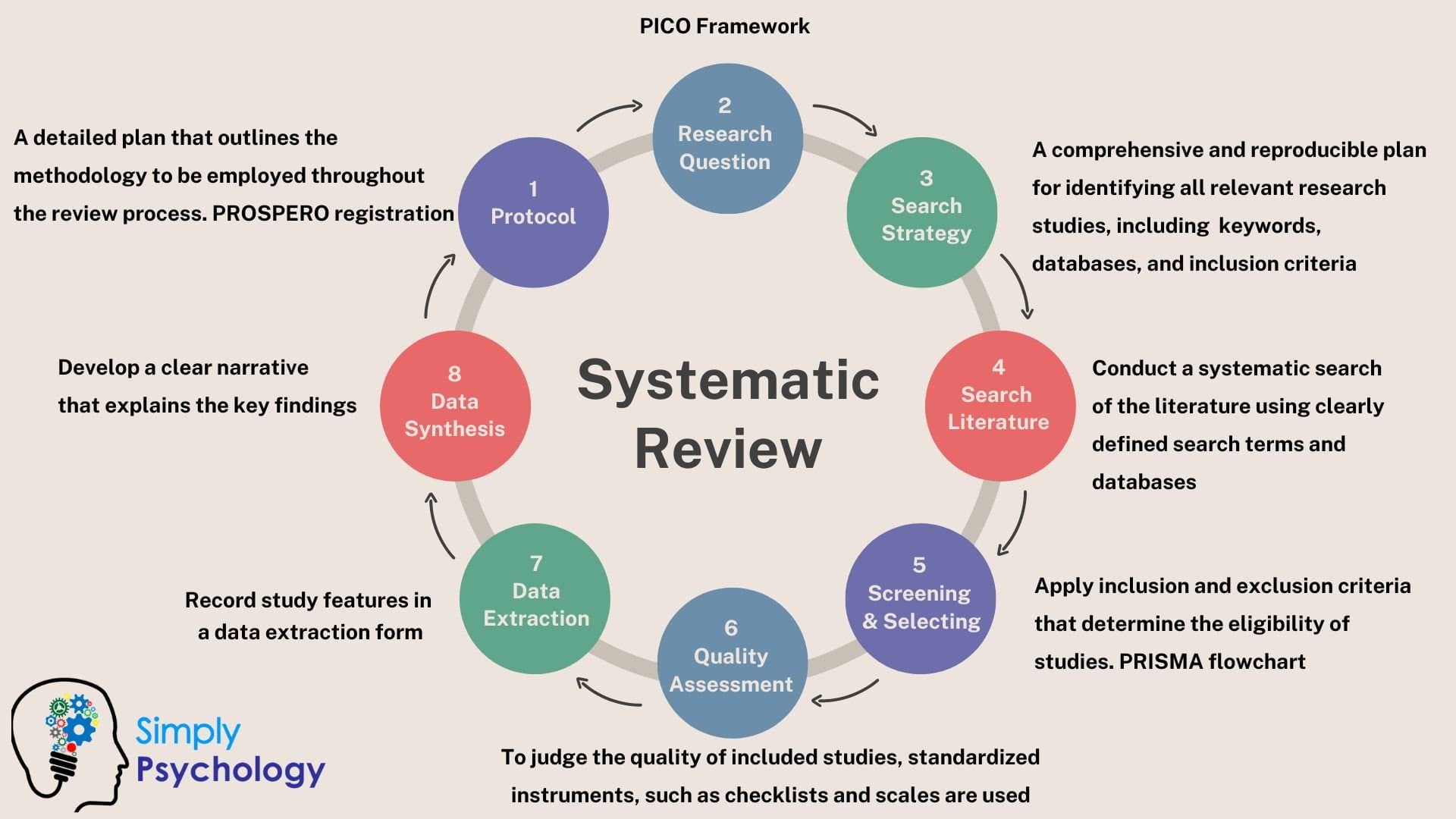

Apply Structured Frameworks

The COSTAR framework transforms weak prompts into precision instruments. Define your Context (what's the situation?), Objective (what do you need?), Style (how should it sound?), Tone (what's the mood?), Audience (who's this for?), and Response format (how should it look?). This systematic approach ensures consistency across all interactions.
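The six COSTAR parts map naturally onto a small data structure. The sketch below is one possible rendering. The class name `CostarPrompt`, the `# SECTION` header layout, and the sample field values are assumptions, not a prescribed format.

```python
from dataclasses import dataclass, fields


@dataclass
class CostarPrompt:
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        """Emit each part under an uppercase header, in COSTAR order."""
        sections = [
            f"# {f.name.upper()}\n{getattr(self, f.name)}" for f in fields(self)
        ]
        return "\n\n".join(sections)


costar_text = CostarPrompt(
    context="We are launching a Chrome extension that protects PII.",
    objective="Write a product description with three key benefits.",
    style="Clear, benefit-led product copy.",
    tone="Conversational.",
    audience="Privacy-conscious professionals who use ChatGPT daily.",
    response="About 500 words, plain paragraphs.",
).render()
print(costar_text)
```

Because every field is required, leaving one out raises an error when the object is built, which acts as a checklist for the framework.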

For maximum privacy protection while refining your prompts, tools like Caviard.ai automatically redact sensitive information before it reaches AI services, ensuring your personal data stays secure during the improvement process.

Measure and Iterate

Establishing clear KPIs helps track improvement objectively. Test prompts across different scenarios with 50-100 test cases initially, then scale based on complexity. Treat prompt development as an engineering discipline with systematic testing and measurement—your results will speak for themselves.
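One simple KPI is a pass rate over a fixed set of test cases. The sketch below assumes a substring check as the pass criterion and uses `echo_model`, a hypothetical stand-in that only uppercases the prompt. A real suite would call an actual model and hold the 50-100 cases mentioned above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PromptCase:
    input_text: str
    must_contain: str


def pass_rate(
    template: str, cases: list[PromptCase], model: Callable[[str], str]
) -> float:
    """Share of cases whose output contains the expected text (case-insensitive)."""
    if not cases:
        raise ValueError("at least one test case is required")
    passed = 0
    for case in cases:
        output = model(template.format(input=case.input_text))
        if case.must_contain.lower() in output.lower():
            passed += 1
    return passed / len(cases)


def echo_model(prompt: str) -> str:
    # Placeholder for a real model call.
    return prompt.upper()


cases = [
    PromptCase("refund request", "refund"),
    PromptCase("late delivery", "apology"),
]
rate = pass_rate("Classify this ticket: {input}", cases, echo_model)
print(f"pass rate: {rate:.0%}")
```

Tracking this number for each prompt revision shows whether a change helped or only moved the failures around.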

Real-World Success Stories: Prompt Doctor Techniques in Action

The impact of refined prompt engineering extends far beyond theory. Organizations applying systematic prompt optimization techniques are achieving transformative results across healthcare, education, and business sectors, with measurable improvements that demonstrate the tangible value of prompt doctor methodologies.

Healthcare Transformation Through Strategic AI Prompting

According to "AI in Healthcare Business Transformation 2025: Proven Frameworks," healthcare organizations implementing strategic AI integration are achieving a $3.20 return for every $1 invested within 14 months, with 30% efficiency gains and 40% faster diagnostics. Results like these depend in part on refined prompt engineering that helps AI systems deliver clinically relevant, accurate outputs.

The report "AI in Telehealth: Results from Top Hospitals in 2025" indicates that leading hospitals using optimized AI prompting systems demonstrate significant improvements across multiple patient conditions. Mayo Clinic's implementation shows how carefully engineered prompts can improve clinical outcomes and patient experience while reducing provider burden, which is what effective prompt doctor techniques aim to deliver.

Cross-Industry ROI Impact

The business case for prompt optimization is compelling. "The Most Impactful Advanced Prompt Techniques of 2025" reports that organizations with sophisticated prompt engineering practices achieve 340% higher ROI on AI investments compared to those using basic prompting approaches, with a 60% reduction in manual prompt engineering overhead.

Protecting Sensitive Data During Prompt Development

When working with AI systems, protecting personal information becomes critical. Caviard.ai offers a solution for healthcare professionals and business users developing prompts with sensitive data. This Chrome extension automatically detects and redacts over 100 types of PII in real time, including names, addresses, and medical information, before prompts reach AI services. Operating entirely locally in your browser, Caviard ensures no sensitive data leaves your machine while you refine prompts. The toggle functionality lets you instantly switch between original and redacted text, which makes it well suited to testing prompts with realistic data while supporting HIPAA compliance and privacy standards.
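To make the idea of redact-then-restore concrete, here is a deliberately small sketch. It is not how Caviard works internally. It is a regex-only illustration that covers just email addresses and card-like digit runs, and the patterns, placeholder format, and function names are all assumptions.

```python
import re

# Simplified patterns for illustration only; real PII detection needs far more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Replace matches with numbered placeholders and remember the originals."""
    mapping: dict[str, str] = {}

    def replacer(kind: str):
        def _sub(match: re.Match) -> str:
            token = f"[{kind}_{len(mapping) + 1}]"
            mapping[token] = match.group(0)
            return token
        return _sub

    text = CARD.sub(replacer("CARD"), text)
    text = EMAIL.sub(replacer("EMAIL"), text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back, similar in spirit to toggling redaction off."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


sample_text = "Contact jane.doe@example.com about card 4111 1111 1111 1111."
redacted_text, pii_map = redact(sample_text)
print(redacted_text)
print(restore(redacted_text, pii_map))
```

The mapping stays on your side, so only the placeholder version would ever be sent to a model.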

Key Lesson: Organizations succeeding with prompt engineering invest in both optimization techniques and privacy protection tools, creating secure environments where teams can experiment and iterate without compromising sensitive information.

Common Prompt Problems and Their Proven Solutions

Ever feel like your AI is speaking a different language? You're not alone. Even experienced users struggle with vague responses, inconsistent outputs, and biased results. The good news? Most prompt problems follow predictable patterns with straightforward fixes.

Problem 1: Vague or Generic Responses. Ask yourself: "Did I provide specific context and examples?" According to prompt engineering best practices, adding concrete examples dramatically improves AI understanding. Instead of "write about marketing," try "write a 500-word blog post about email marketing strategies for B2B SaaS companies targeting CMOs."

Problem 2: Inconsistent Outputs. This often stems from ambiguous instructions. Effective prompt engineering involves encouraging AI to evaluate options before responding. Include format requirements, tone specifications, and success criteria upfront.

Problem 3: Bias in Results. Combat this by using neutral, inclusive language in your prompts. Ask follow-up questions like "What perspectives might be missing?" or ask the AI to critically assess its own response.
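The follow-up question for bias can be added as an extra conversation turn. This sketch assumes a chat-style message list. The `add_critique_turn` helper and the draft text are hypothetical.

```python
def add_critique_turn(
    messages: list[dict[str, str]], draft: str
) -> list[dict[str, str]]:
    """Append the model's draft and a follow-up asking it to assess that draft."""
    return messages + [
        {"role": "assistant", "content": draft},
        {
            "role": "user",
            "content": (
                "What perspectives might be missing? "
                "Critically assess your response, then revise it."
            ),
        },
    ]


conversation = [{"role": "user", "content": "Describe a typical software engineer."}]
# Hand-written stand-in for the model's first reply.
conversation = add_critique_turn(conversation, "A typical software engineer is...")
for turn in conversation:
    print(f"{turn['role']}: {turn['content']}")
```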

Bonus Protection Tip: When troubleshooting prompts containing sensitive information, use Caviard.ai—a Chrome extension that automatically redacts personal data before it reaches AI services. It works 100% locally in your browser, protecting names, addresses, and credit card numbers in real-time without any configuration needed.

Advanced Tips: Taking Your Prompt Quality to the Next Level

Ready to become a prompt engineering power user? These expert strategies will help you scale your AI operations while maintaining bulletproof privacy standards.

Multi-Model Testing for Robust Results

Don't put all your eggs in one AI basket. According to OpenAI's prompt engineering guide, different models excel at different tasks. Test your prompts across multiple AI platforms—ChatGPT, Claude, or DeepSeek—to identify which delivers the best results for specific use cases. This approach reveals blind spots and helps you create more versatile prompts that work consistently across platforms. When testing, keep detailed notes on each model's strengths and quirks.
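A side-by-side comparison can be sketched without committing to any vendor API by treating each model as a plain function. The three stubs below are hypothetical placeholders. In practice each would wrap a real client call.

```python
from typing import Callable


def compare_models(
    prompt: str, models: dict[str, Callable[[str], str]]
) -> dict[str, str]:
    """Send one prompt to every model and collect replies, recording failures."""
    results: dict[str, str] = {}
    for name, call in models.items():
        try:
            results[name] = call(prompt)
        except Exception as exc:  # keep going so one failure does not hide the rest
            results[name] = f"ERROR: {exc}"
    return results


def concise_stub(prompt: str) -> str:
    return prompt.split(".")[0] + "."


def verbose_stub(prompt: str) -> str:
    return f"Certainly! Here is a detailed answer to: {prompt}"


def failing_stub(prompt: str) -> str:
    raise TimeoutError("model did not respond")


results = compare_models(
    "Summarize our refund policy. Keep it short.",
    {"model-a": concise_stub, "model-b": verbose_stub, "model-c": failing_stub},
)
for name, reply in results.items():
    print(f"{name} ({len(reply)} chars): {reply}")
```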

Building Your Prompt Library

Teams using organized prompt libraries report 40-60% improvement in output consistency. Start simple: use Notion or a shared document with columns for prompt name, use case, variables, and performance notes. Tag prompts by department, complexity level, and success rate. This systematic approach transforms scattered experiments into institutional knowledge your entire team can leverage.
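If a shared document becomes hard to search, the same columns can live in a small data structure. The sketch below is one possible layout. The class names, tag values, and the `$variable` placeholder syntax (Python's standard `string.Template`) are choices made for illustration.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass
class PromptEntry:
    name: str
    use_case: str
    template: str  # variables are written as $name
    tags: set[str] = field(default_factory=set)
    notes: str = ""  # performance notes from testing

    def fill(self, **variables: str) -> str:
        """Substitute variables; raises KeyError if one is missing."""
        return Template(self.template).substitute(**variables)


class PromptLibrary:
    def __init__(self) -> None:
        self._entries: dict[str, PromptEntry] = {}

    def add(self, entry: PromptEntry) -> None:
        self._entries[entry.name] = entry

    def by_tag(self, tag: str) -> list[PromptEntry]:
        return [e for e in self._entries.values() if tag in e.tags]


library = PromptLibrary()
library.add(
    PromptEntry(
        name="product-description",
        use_case="Launch copy",
        template="Write a $length product description for $product.",
        tags={"marketing", "simple"},
        notes="Works best with a named audience.",
    )
)
entry = library.by_tag("marketing")[0]
filled = entry.fill(length="500-word", product="a Chrome extension")
print(filled)
```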

Scaling with Safety: The Privacy-First Approach

As your prompt library grows, so does your exposure to data leaks. This is where Caviard.ai becomes invaluable for teams handling sensitive information. The Chrome extension automatically detects and redacts 100+ types of PII before your prompts reach any AI service—all processed locally in your browser. Unlike manual redaction methods that miss context-specific identifiers, Caviard works in real-time across languages while maintaining conversation flow. With its toggle functionality, you can instantly verify what's being masked, ensuring your advanced prompt strategies never compromise data security.

Your AI assistant just gave you another vague, rambling response that completely missed the point. Sound familiar? You're spending more time fixing AI outputs than it would take to write the content yourself—and worse, you might be accidentally leaking sensitive client data in the process. The solution isn't abandoning AI tools; it's learning to "diagnose and treat" your prompts like a doctor examines patients. Prompt doctor techniques transform frustrating AI interactions into precision instruments that deliver exactly what you need while keeping your personal information secure. Whether you're drafting therapy notes, analyzing business data, or creating content, mastering these proven methods will save you hours of revision time and protect your privacy. In this guide, you'll discover five essential techniques that top AI users rely on to get consistently excellent results—plus a critical privacy tool that ensures your sensitive information never reaches AI servers in the first place.