When AI Conversations Become Data Gold Mines

You type a quick question into ChatGPT about your health insurance claim. A few minutes later, you ask for help drafting an email to your bank about that credit card dispute. Later that evening, you brainstorm business ideas using your company's confidential financial data. Each keystroke feels private, but here's the uncomfortable truth: you might be handing over your most sensitive information to a system that never forgets.

Most people don't realize their AI conversations aren't just disappearing into the ether: they can become training data for tomorrow's models. And what goes into training data can come back out. Researchers have already coaxed ChatGPT into reproducing memorized training data verbatim, including real people's names, email and physical addresses, and phone numbers. As AI systems grow larger, they memorize more of what they are trained on, creating an expanding privacy crisis that few users even know exists.

This article explores how AI ethics directly impacts your data privacy when using tools like ChatGPT and DeepSeek. You'll discover what happens to your prompts behind the scenes, understand six ethical frameworks that reveal different dimensions of this privacy challenge, and learn practical strategies to protect yourself—including automated solutions that shield your sensitive information before it ever reaches an AI system.

The Hidden Privacy Crisis: What Happens to Your AI Prompts

Every time you type a question into ChatGPT or another AI chatbot, you're not just getting an answer—you're potentially adding your personal information to a vast training dataset. Most users have no idea that their seemingly private conversations are being collected, stored, and used to train future AI models.

According to Extracting Training Data from ChatGPT, researchers spent about $200 on queries to extract more than 10,000 unique verbatim memorized training examples from ChatGPT, and estimated that roughly a gigabyte of training data could be extracted with a larger query budget. Even more alarming, research published on arXiv shows that memorization scales with model size: larger AI models memorize, and can therefore leak, more of their training data, including any personal information it contains.

A Stanford study examined major AI companies' privacy policies and uncovered troubling practices: long data retention periods, training on children's data, and a general lack of transparency. Lead researcher Jennifer King emphasizes that "we have hundreds of millions of people interacting with AI chatbots, which are collecting personal data for training, and almost no research has been conducted."

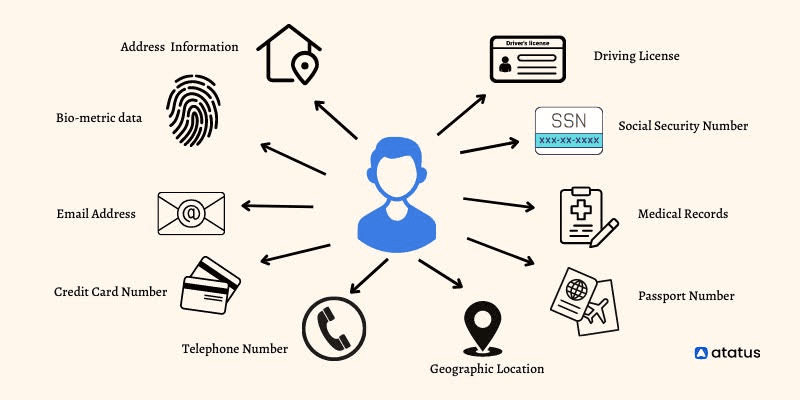

Here's what's at risk when you chat with AI:

- Full names, addresses, and contact information

- Credit card numbers and financial details

- Medical histories and health conditions

- Work-related confidential information

- Personal conversations and private thoughts

For users concerned about these risks, tools like Caviard.ai offer a practical solution. This Chrome extension operates entirely locally in your browser, automatically detecting and redacting over 100 types of personal information before it reaches AI services. Unlike server-based solutions, Caviard ensures your data never leaves your machine—addressing the core privacy crisis at its source.

Sources:

- Extracting Training Data from ChatGPT

- Privacy Attacks on Large Language Models

- Be Careful What You Tell Your AI Chatbot

AI Ethics Frameworks: Six Lenses for Understanding Prompt Privacy

When you share prompts with AI tools like ChatGPT, you're not just having a conversation—you're potentially exposing sensitive information that could be stored, analyzed, or even memorized by the AI system. To navigate this complex landscape, we can examine prompt privacy through six distinct ethical frameworks that reveal different dimensions of responsibility and protection.

Rights-Based Ethics: Who Owns Your Prompts?

From a rights perspective, the central question is simple: Do you own the data in your prompts? When researchers extracted over 10,000 verbatim training examples from ChatGPT, including personal information from real individuals, they highlighted a fundamental rights violation. Your name, address, or financial details shouldn't become training material without explicit consent. Tools like Caviard.ai address this by automatically detecting and redacting over 100 types of personal information before it reaches AI services—processing everything locally in your browser so your data never leaves your machine.

Justice Ethics: Equal Access to Privacy

Not everyone has equal access to privacy protection. Consider a small business owner versus a corporate user with enterprise-level security. Ethical considerations in healthcare highlight how vulnerable populations (elderly patients, minorities, or those with limited technical literacy) face greater risks when AI systems lack proper safeguards. Justice demands that privacy protection not be a luxury available only to those who can afford expensive security solutions.

Utilitarian Perspective: Collective vs. Individual Benefit

Utilitarianism asks: Does the benefit to society from AI training outweigh individual privacy risks? AI companies argue that using prompt data improves services for everyone. But when your medical history or financial troubles become training data, the individual harm may far exceed collective gains. The challenge lies in balancing innovation with protection—ensuring AI advancement doesn't come at the cost of fundamental human rights.

Common Good Ethics: Building Trustworthy AI Ecosystems

The common good framework focuses on societal impact. When users lose trust in AI systems due to privacy breaches, everyone suffers. Healthcare professionals benefit from guidance that enables responsible AI implementation, but only if patients trust these systems. Solutions that operate transparently—like browser extensions that let you toggle between original and redacted text—build the trust necessary for AI to serve the common good.

Virtue Ethics: Organizational Responsibility

Virtue ethics provides theoretical tools for evaluating AI development through the lens of organizational character. Are companies demonstrating wisdom, transparency, and respect? When companies deploy AI systems that memorize personal information without adequate safeguards, they fail the virtue test. Responsible organizations prioritize ethical design principles that align with human dignity from the start.

Care Ethics: Protecting Vulnerable Users

Care ethics asks: Who is most at risk? Elderly users unfamiliar with AI privacy implications, children using AI tutoring systems, or individuals in crisis seeking AI advice may inadvertently expose sensitive information. This framework demands special attention to power imbalances and contextual vulnerabilities—ensuring protection for those least equipped to protect themselves.

These six frameworks aren't competing philosophies—they're complementary lenses that together reveal the full ethical landscape of AI prompt privacy. Real protection requires addressing data ownership, ensuring equitable access, balancing collective benefits, building trust, demanding corporate virtue, and shielding vulnerable users.

Real-World Data Breaches: When AI Privacy Fails

The promise of AI innovation has collided with harsh reality in several high-profile cases. Understanding these AI data breach examples helps organizations and individuals recognize the genuine risks of unchecked AI deployment.

The Google DeepMind-NHS partnership stands as a watershed moment in AI privacy violations. In 2015, the Royal Free London NHS Foundation Trust shared the medical records of about 1.6 million patients with DeepMind to develop a clinical app, without patients' knowledge or consent. In 2017, the UK's Information Commissioner's Office ruled that the Royal Free had failed to comply with the Data Protection Act, and the data sharing later became the subject of a representative (class-action-style) lawsuit, exposing how easily sensitive healthcare data can end up with AI developers.

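ChatGPT has faced its own security incidents between 2023 and 2025. In March 2023, for example, a bug in an open-source library briefly let some users see the titles of other users' conversations and exposed payment-related details of 1.2% of the ChatGPT Plus subscribers active during a nine-hour window. Amazon even warned employees not to share confidential information with ChatGPT after noticing responses that closely resembled internal company data, a sign that such material may have been absorbed as training data.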

These breaches reveal a disturbing pattern: AI deployments often prioritize innovation over privacy, with devastating consequences. Patients lose control of their medical records, employees inadvertently leak company secrets, and individuals risk identity theft, fraud, and a lasting loss of trust.

The solution? Proactive protection before data enters AI systems. Tools like Caviard.ai represent a smarter approach—automatically detecting and masking over 100 types of personal information in real-time, entirely within your browser. Unlike reactive security measures that attempt to fix breaches after they occur, Caviard prevents sensitive data from ever reaching AI platforms in the first place, giving users complete control over their privacy while maintaining AI's utility.

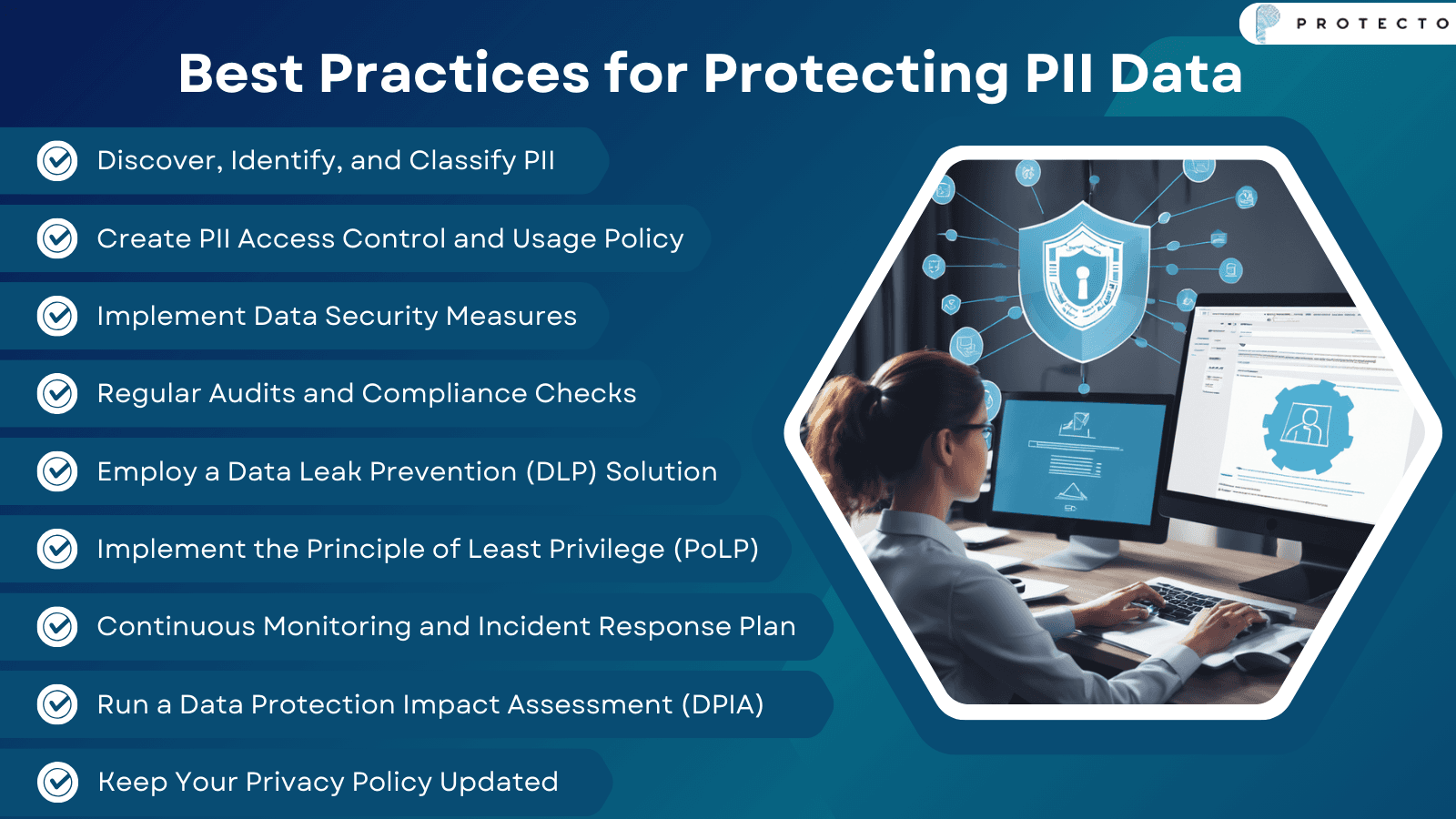

Regulatory Compliance: GDPR, AI Ethics, and Your Prompts

Navigating the intersection of GDPR compliance and prompt engineering isn't just about checking boxes; it means fundamentally rethinking how we handle personal data in AI interactions. GDPR fines can already reach €20 million or 4% of global annual turnover, whichever is higher, and the EU AI Act's obligations begin phasing in from 2025, so organizations must proactively protect sensitive information before it reaches AI systems.

Think of every AI prompt as a potential data-processing event requiring the same rigor as any other processing of personal data. Under GDPR, you need a lawful basis (such as consent) for processing personal data, an additional condition such as explicit consent for special-category data like health records, transparent privacy notices, and infrastructure supporting data subject rights, including the right to erasure, which has become an enforcement priority in 2025. When someone types "My name is Sarah Johnson and I live at 42 Oak Street," that prompt contains personal data subject to full GDPR protections.

Essential compliance practices for AI prompting:

- Implement real-time PII protection: Tools like Caviard.ai automatically detect and redact 100+ types of sensitive data locally in your browser before prompts reach AI services, ensuring your personal information never leaves your machine while maintaining context for accurate AI responses

- Conduct Data Protection Impact Assessments: DPIAs are mandatory for AI systems processing personal data with high privacy risks, helping organizations systematically evaluate and mitigate potential violations

- Document processing activities: Maintain comprehensive records of how AI systems handle personal data, including retention periods and legal bases for processing
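To make the record-keeping step concrete, here is a minimal Python sketch of a single entry in a record of processing activities. The `ProcessingRecord` class and its fields are hypothetical, loosely modeled on the items GDPR Article 30 asks controllers to document plus the legal basis mentioned above; treat it as an illustration, not a compliance template.

```python
from dataclasses import dataclass, field
from datetime import timedelta
from typing import List, Optional


@dataclass
class ProcessingRecord:
    """One entry in a record of processing activities (hypothetical schema)."""
    system: str                            # the AI tool or integration
    purpose: str                           # why personal data is processed
    legal_basis: str                       # e.g. "consent", "legitimate interests"
    data_categories: List[str] = field(default_factory=list)
    recipients: List[str] = field(default_factory=list)
    retention: Optional[timedelta] = None  # None = retention period not yet documented

    def gaps(self) -> List[str]:
        """List the fields a reviewer still needs to complete."""
        missing = []
        if not self.legal_basis:
            missing.append("legal_basis")
        if not self.data_categories:
            missing.append("data_categories")
        if self.retention is None:
            missing.append("retention")
        return missing


record = ProcessingRecord(
    system="Customer-support chatbot",
    purpose="Draft replies to support tickets",
    legal_basis="legitimate interests",
    data_categories=["name", "email address"],
    recipients=["external LLM provider"],
)
print(record.gaps())  # ['retention']
```

Flagging missing fields, such as an undocumented retention period, gives reviewers a concrete to-do list rather than a free-text form.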

Industry-specific regulations add another layer. Healthcare organizations using AI must ensure HIPAA compliance when processing protected health information, and by one industry estimate 67% are unprepared for the stricter standards expected in 2025. Financial institutions face similar pressures balancing AI innovation with regulatory requirements. The key is building privacy protections directly into your prompt engineering workflow, not bolting them on afterward.

Ethical Prompt Engineering: Best Practices for Privacy Protection

Building privacy-safe AI prompts isn't just good practice; it's essential. With 8.5% of GenAI prompts containing sensitive data, and customer information making up 45.77% of that sensitive data, organizations face significant risks when crafting AI interactions. The good news? Ethical prompt engineering offers practical solutions that protect privacy while maintaining AI functionality.

Minimize PII Exposure Through Smart Design

The most effective strategy is preventing sensitive data from reaching AI systems altogether. Caviard.ai exemplifies this approach as a Chrome extension that automatically detects and redacts over 100 types of personal information in real-time—completely locally in your browser. This ensures no sensitive data leaves your machine when interacting with ChatGPT, DeepSeek, or similar platforms.

- Safe prompt: "Analyze this customer feedback about checkout difficulties" (no names, emails, or payment details)

- Unsafe prompt: "Review complaint from john.smith@email.com about failed transaction on card 4782-5510-3267-8901"
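The difference between these two prompts can be checked automatically before anything is sent. The sketch below is a simplified Python illustration under stated assumptions: `PII_PATTERNS` and `find_pii` are hypothetical names, and two regular expressions stand in for the far broader detection that dedicated tools perform. It does not describe how Caviard.ai or any other product works internally.

```python
import re
from typing import List, Tuple

# Illustrative patterns only (hypothetical); real detectors cover many more PII types
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # 13 to 16 digits, optionally grouped with spaces or hyphens
    "card_number": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),
}


def find_pii(prompt: str) -> List[Tuple[str, str]]:
    """Return (pii_type, matched_text) pairs found in the prompt."""
    hits = []
    for pii_type, pattern in PII_PATTERNS.items():
        for match in pattern.finditer(prompt):
            hits.append((pii_type, match.group()))
    return hits


safe = "Analyze this customer feedback about checkout difficulties"
unsafe = ("Review complaint from john.smith@email.com about failed "
          "transaction on card 4782-5510-3267-8901")
print(find_pii(safe))    # []
print(find_pii(unsafe))  # [('email', 'john.smith@email.com'), ('card_number', '4782-5510-3267-8901')]
```

Pattern matching alone misses unstructured details such as names or medical conditions and can misfire on long order numbers; production detectors typically add context rules, checksums such as the Luhn check for card numbers, or machine-learning models.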

Prevent Hallucinations with Structured Guidance

According to Microsoft's Azure AI best practices, detailed prompts significantly reduce hallucinations. Chain-of-thought prompting improves accuracy by breaking complex queries into manageable steps, while role-based instructions keep the model focused on a defined task and scope.
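A structured prompt of this kind can be assembled in code. In this minimal Python sketch, `build_messages` is a hypothetical helper, and the list of `role`/`content` dictionaries is the message shape many chat APIs accept; the function only builds the prompt and calls no service.

```python
from typing import Dict, List


def build_messages(role: str, task: str, steps: List[str], context: str) -> List[Dict[str, str]]:
    """Build a role-based, step-by-step prompt as chat messages (hypothetical helper)."""
    # Role-based instruction that also tells the model to stay within the supplied context
    system = (
        f"You are {role}. Answer only from the context provided. "
        "If the context does not contain the answer, say so."
    )
    # Number the steps so the model works through the task one stage at a time
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    user = f"Task: {task}\nWork through these steps in order:\n{numbered}\n\nContext:\n{context}"
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]


messages = build_messages(
    role="a customer-experience analyst",
    task="Summarize the main checkout problems",
    steps=["List each distinct complaint", "Group similar complaints", "Rank the groups by frequency"],
    context="Feedback 1: The pay button froze. Feedback 2: My card was declined twice.",
)
```

The system message carries the role-based instruction, the numbered steps guide the model through the task in order, and the context, like the safe prompt earlier, contains no names or payment details.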

Implement Data Masking and Tokenization

Organizations should employ data masking and tokenization to balance security with usability. These techniques allow AI processing while safeguarding actual sensitive values. Combine this with employee training on unauthorized AI tool risks to maintain compliance with privacy regulations like GDPR and HIPAA.
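Tokenization can be sketched as a small local vault that swaps sensitive values for placeholder tokens before a prompt is sent and restores them in the response. `TokenVault` is a hypothetical class written for illustration, and the single email pattern is an assumption standing in for a fuller detector. Note that under GDPR (Recital 26), tokenized data that can be re-identified with the vault's mapping is pseudonymized, not anonymized, so the mapping itself must stay protected.

```python
import re
from typing import Dict


class TokenVault:
    """Hypothetical local vault that swaps sensitive values for placeholder tokens and back."""

    def __init__(self) -> None:
        self._token_to_value: Dict[str, str] = {}
        self._value_to_token: Dict[str, str] = {}

    def tokenize(self, text: str, pattern: re.Pattern) -> str:
        """Replace every match of `pattern` with a token; a repeated value reuses its token."""
        def replace(match: re.Match) -> str:
            value = match.group()
            if value not in self._value_to_token:
                token = f"<TOKEN_{len(self._value_to_token) + 1}>"
                self._value_to_token[value] = token
                self._token_to_value[token] = value
            return self._value_to_token[value]
        return pattern.sub(replace, text)

    def detokenize(self, text: str) -> str:
        """Restore original values in text returned by the AI service, on the local side."""
        for token, value in self._token_to_value.items():
            text = text.replace(token, value)
        return text


EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
vault = TokenVault()
masked = vault.tokenize("Reply to john.smith@email.com and cc john.smith@email.com", EMAIL)
print(masked)                                          # Reply to <TOKEN_1> and cc <TOKEN_1>
print(vault.detokenize("Draft sent to <TOKEN_1>."))    # Draft sent to john.smith@email.com.
```

Because the mapping never leaves the local side, the AI service only ever sees `<TOKEN_1>`, yet the user still gets a reply that refers to the real address.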

Local PII Redaction: The Technical Solution to Prompt Privacy

When you share sensitive details with AI chatbots, that information typically travels to remote servers where it's processed, stored, and potentially exposed to breaches. But what if the protection happened before your data ever left your computer? That's the revolutionary promise of local PII redaction technology.

How Client-Side Redaction Works

Local PII redaction operates entirely within your browser, creating an invisible shield between you and AI services. As you type prompts containing sensitive information—names, addresses, credit card numbers, medical records—intelligent algorithms scan your text in real-time to identify personal data. The magic happens instantly: detected information gets replaced with realistic substitutes that maintain conversational context while protecting your privacy.
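The substitution step can be sketched in Python. This is a hypothetical `SurrogateRedactor`, not Caviard.ai's implementation; it assumes two simple regular-expression detectors for email addresses and card numbers, replaces each detected value with a realistic stand-in, and reuses the same stand-in whenever a value repeats so the conversation stays coherent.

```python
import random
import re
from typing import Callable, Dict

EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
CARD = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")  # 13 to 16 digits, optional spaces/hyphens


class SurrogateRedactor:
    """Hypothetical redactor that swaps detected PII for realistic, consistent stand-ins."""

    def __init__(self, seed: int = 0) -> None:
        self._rng = random.Random(seed)  # seeded so runs are reproducible
        self._surrogates: Dict[str, str] = {}
        self._email_count = 0

    def _fake_email(self, _original: str) -> str:
        # example.com is reserved for documentation (RFC 2606), so no real inbox is referenced
        self._email_count += 1
        return f"user{self._email_count}@example.com"

    def _fake_card(self, original: str) -> str:
        # Keep the original grouping but replace every digit with a random one
        return "".join(str(self._rng.randint(0, 9)) if ch.isdigit() else ch for ch in original)

    def _substitute(self, value: str, make: Callable[[str], str]) -> str:
        # Reuse the same stand-in for a repeated value so the conversation stays coherent
        if value not in self._surrogates:
            self._surrogates[value] = make(value)
        return self._surrogates[value]

    def redact(self, text: str) -> str:
        text = EMAIL.sub(lambda m: self._substitute(m.group(), self._fake_email), text)
        return CARD.sub(lambda m: self._substitute(m.group(), self._fake_card), text)


redactor = SurrogateRedactor(seed=42)
print(redactor.redact("Refund john.smith@email.com for card 4782-5510-3267-8901"))
print(redactor.redact("Also notify john.smith@email.com"))  # Also notify user1@example.com
```

Realistic stand-ins, unlike opaque placeholders, keep the prompt reading naturally, so the model can still treat them as an email address and a card number without ever seeing the real values.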

Unlike traditional cloud-based PII redaction APIs that require sending your data to external servers for processing, client-side solutions like Caviard.ai perform 100% local processing. Nothing leaves your machine. This approach addresses the fundamental vulnerability in AI prompting—you maintain control over your sensitive data while still accessing AI's full capabilities.

The Leading Solution for Prompt Privacy

Caviard.ai exemplifies this technology's potential as a Chrome extension that automatically protects users across ChatGPT and DeepSeek. It detects over 100 types of PII—from standard identifiers like social security numbers to nuanced information like medical conditions and financial details. The extension supports multiple languages and offers customizable detection rules for different privacy needs.

The user experience is seamless: type naturally, and sensitive information gets masked automatically. Need to verify what's being protected? Toggle between original and redacted text with a keyboard shortcut. This real-time visibility ensures you always know exactly what AI services receive.

Sources:

- Caviard.ai: Redact personal information (PII) in ChatGPT and DeepSeek

- Top PII redaction tools guide

- Caviard.ai Blog: Emerging Techniques for Data Anonymization

Implementation Guide: Building a Privacy-First AI Strategy

Implementing privacy-protective AI practices doesn't have to be overwhelming. Here's your actionable roadmap to build a robust AI privacy strategy that protects sensitive data while enabling innovation.

Start with Privacy Impact Assessments

Begin by conducting comprehensive Privacy Impact Assessments (PIAs) for every AI initiative. These proactive evaluations identify privacy risks before they become problems. Map data flows, document processing activities, and assess potential impacts on individuals' privacy rights. Regular monitoring throughout the AI lifecycle ensures you catch emerging risks early.
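The data-flow mapping step can start as a simple inventory that is queried for risky transfers. In this minimal Python sketch, the `DataFlow` schema and the list of personal-data categories are hypothetical; a full assessment would also weigh likelihood, severity, and legal basis.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

# Hypothetical set of data categories treated as personal for this assessment
PERSONAL_CATEGORIES = frozenset({"name", "email", "health", "financial"})


@dataclass(frozen=True)
class DataFlow:
    """One edge in a data-flow map for an AI initiative (hypothetical schema)."""
    source: str
    destination: str
    categories: FrozenSet[str]
    external: bool   # does the data leave the organization's own infrastructure?
    redacted: bool   # is personal data removed before the transfer?


def flows_needing_review(flows: List[DataFlow]) -> List[DataFlow]:
    """Flag flows that send unredacted personal data outside the organization."""
    return [
        f for f in flows
        if f.external and not f.redacted and f.categories & PERSONAL_CATEGORIES
    ]


flows = [
    DataFlow("CRM", "internal analytics", frozenset({"name", "email"}), external=False, redacted=False),
    DataFlow("support inbox", "external chatbot", frozenset({"email", "financial"}), external=True, redacted=False),
    DataFlow("support inbox", "external chatbot", frozenset({"email"}), external=True, redacted=True),
]
for flow in flows_needing_review(flows):
    print(f"{flow.source} -> {flow.destination}: {sorted(flow.categories & PERSONAL_CATEGORIES)}")
# support inbox -> external chatbot: ['email', 'financial']
```

Only the external, unredacted flow is flagged; the internal flow and the redacted external flow pass this first screen.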

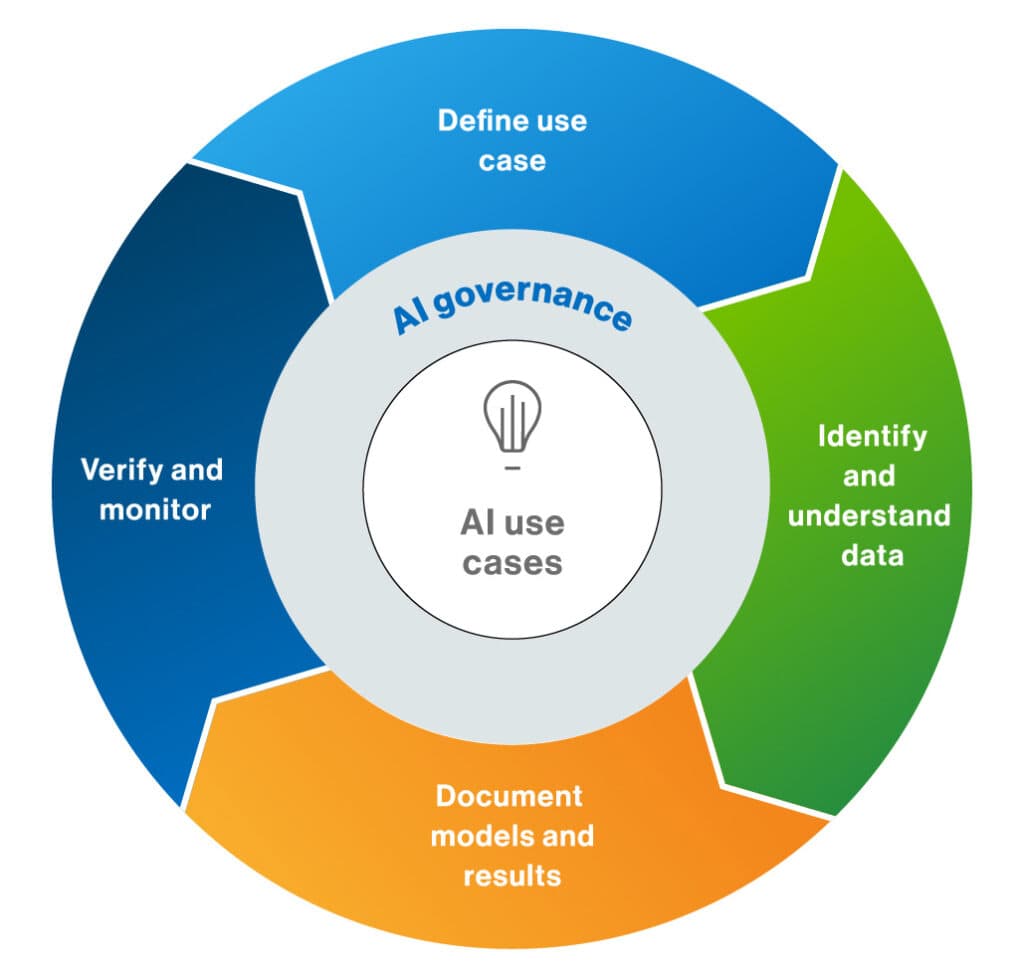

Establish Cross-Functional Governance

Create an AI Ethics and Governance Board with representatives from legal, IT, security, and business units. This committee reviews high-risk use cases, approves policies, monitors effectiveness through KPIs, and escalates critical issues. Having diverse perspectives ensures your governance framework addresses technical, ethical, and business considerations.

Implement Technical Safeguards

For immediate PII protection, deploy tools like Caviard.ai, a Chrome extension that automatically redacts 100+ types of personal information before it reaches AI services like ChatGPT or DeepSeek. Operating entirely locally in your browser, it detects sensitive data in real-time and masks it with realistic substitutes while preserving context. Users can toggle between original and redacted text instantly, making it an optimal solution for teams who need both privacy protection and workflow efficiency.

Deploy Comprehensive Controls

Implement essential AI governance tools including centralized access platforms with policy enforcement, role-based identity management, data loss prevention systems, and audit logging. These technical controls create guardrails that automatically enforce your privacy policies.
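The controls listed above can be combined into a single checkpoint that every prompt passes through. This Python sketch is illustrative only: the role table, DLP rules, and `check_prompt` function are hypothetical and do not reflect any particular governance product.

```python
import json
import re
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical policy: which AI tools each role may use
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"chat-assistant"},
    "engineer": {"chat-assistant", "code-assistant"},
}
# Illustrative DLP rules; production DLP systems cover far more data types
DLP_RULES = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),
}
audit_log: List[str] = []


def check_prompt(user: str, role: str, tool: str, prompt: str) -> bool:
    """Apply role-based access and DLP checks, log the decision, and return whether to forward."""
    violations = [name for name, rule in DLP_RULES.items() if rule.search(prompt)]
    if tool not in ROLE_PERMISSIONS.get(role, set()):
        decision, reason = "deny", "role not permitted for this tool"
    elif violations:
        decision, reason = "block", "DLP match: " + ", ".join(violations)
    else:
        decision, reason = "allow", ""
    # The audit entry records who, which tool, and why, but never the prompt text itself
    audit_log.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "tool": tool,
        "decision": decision,
        "reason": reason,
    }))
    return decision == "allow"


print(check_prompt("alice", "analyst", "chat-assistant", "Summarize Q3 churn drivers"))  # True
print(check_prompt("alice", "analyst", "code-assistant", "Refactor this function"))      # False
print(check_prompt("bob", "engineer", "chat-assistant", "Email john.smith@email.com"))   # False
```

Because the audit entry stores only the decision and the category of data detected, the log itself does not become a second store of sensitive information.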

Train Your Organization

Educate employees on ethical prompting practices and data governance standards. Training should cover recognizing sensitive data, proper prompt engineering techniques, and when to engage privacy reviews. Make privacy awareness part of your culture, not just a compliance checkbox.

Privacy-First AI Implementation Checklist:

✅ Conduct privacy impact assessments for all AI projects

✅ Establish cross-functional governance committee

✅ Deploy local PII redaction tools for AI interactions

✅ Implement centralized AI access controls

✅ Create data classification and handling policies

✅ Set up continuous monitoring and audit logging

✅ Train staff on ethical AI prompting

✅ Define accountability mechanisms and escalation paths

✅ Document all AI use cases and risk assessments

✅ Schedule quarterly governance reviews

By following this systematic approach to AI governance best practices, you'll build a privacy-first foundation that enables innovation while protecting what matters most—your data and your stakeholders' trust.

The Future of AI Ethics: Emerging Trends and Predictions

The landscape of AI ethics is undergoing a dramatic transformation as we move deeper into 2025. According to AI Governance In 2025: Expert Predictions On Ethics, Tech, And Law, AI governance is "no longer just an ethical afterthought; it's becoming standard business practice." This shift from philosophical discussion to operational reality marks a turning point in how organizations approach responsible AI implementation.

Regulatory Developments on the Horizon

The regulatory landscape is fragmenting rather than converging. AI trends for 2025: AI regulation, governance and ethics reveals that "earlier optimism that global policymakers would enhance cooperation and interoperability within the regulatory landscape now seems distant." Instead, Navigating The Future: AI Ethics And Regulation In 2025 notes that different regions are adopting varied frameworks, creating a patchwork of regulations that organizations must navigate carefully.

The shift toward compliance-focused governance is accelerating. Experts predict that certification to ISO/IEC 42001, the international standard for AI management systems, will be the hottest ticket in 2025, as organizations move beyond AI buzz to tackle real security and compliance requirements.

Privacy-by-Design Architectures Take Center Stage

As AI data breaches emerge as a top global risk, privacy-preserving technologies are becoming essential. Critical Findings from Stanford's 2025 AI Index Report emphasizes the need for structured approaches to managing AI access to sensitive information, and solutions like Caviard.ai put that principle into practice at the point where prompts are written.

Caviard represents the next generation of privacy-by-design thinking. This Chrome extension automatically detects and redacts over 100 types of personal information in real-time as you type prompts into AI services like ChatGPT or DeepSeek. Unlike cloud-based solutions that introduce new privacy risks, Caviard processes everything locally in your browser—ensuring that your sensitive data never leaves your machine. Users can toggle between original and redacted text with a simple keyboard shortcut, maintaining both privacy and context. For anyone serious about protecting their data while leveraging AI capabilities, Caviard offers a practical, user-friendly solution that aligns perfectly with emerging AI privacy trends.

Sources:

- AI Governance In 2025: Expert Predictions

- AI Ethics And Regulation In 2025

- Dentons AI Trends Report

- Stanford AI Index Report Analysis

Conclusion: Take Control of Your AI Privacy Today

The stakes couldn't be higher. Every prompt you send to ChatGPT potentially exposes your personal information to training datasets, creating privacy risks that most users never consider. We've explored the ethical frameworks that reveal these vulnerabilities, examined real-world breaches in healthcare and enterprise settings, and analyzed regulatory requirements that demand immediate action.

The solution isn't abandoning AI—it's protecting yourself before data leaves your machine. Whether you're an individual user or managing enterprise AI systems, three actions matter most: implement local PII redaction tools that process data in your browser, conduct regular privacy assessments of your AI usage, and establish clear guidelines for ethical prompting practices.

Caviard.ai represents the most practical step you can take today—a Chrome extension that automatically detects and masks over 100 types of sensitive information in real-time, entirely within your browser. No cloud processing, no data leaks, no compromises. You maintain full control while still accessing AI's capabilities.

Don't wait for the next data breach headline to feature your information. Install privacy protection now and reclaim control over your digital footprint. Your future self will thank you for taking action today rather than reacting to a crisis tomorrow.

FAQ: Your Top Questions About AI Prompt Privacy Answered

Q: Do AI chatbots like ChatGPT save my prompts?

Yes. Most AI services store prompts, and many use them to train and improve their models. OpenAI retains ChatGPT conversation data and, for consumer accounts, may use it for training unless you opt out in the data controls settings. Research has also shown that ChatGPT can memorize and reproduce training data verbatim, including personal information.

Q: Can I delete my ChatGPT conversation history?

You can delete visible conversations in your account, but this doesn't guarantee removal from training datasets or backup systems. Data retention policies vary by service, and deleted conversations may persist in backend systems for extended periods.

Q: What types of personal information are most at risk in AI prompts?

Names, addresses, phone numbers, email addresses, credit card details, social security numbers, medical information, financial data, and work-related confidential information face the highest exposure risk when shared with AI systems.

Q: How does local PII redaction work?

Local redaction tools like Caviard.ai scan your text in real-time within your browser, detecting sensitive information and replacing it with realistic substitutes before data reaches AI servers. Everything happens on your device—no external servers process your information.

Q: Is using AI for healthcare or legal questions safe?

Without proper privacy protections, professionals who share patient or client information with AI chatbots risk HIPAA violations or jeopardizing attorney-client privilege, and individuals lose control over their own sensitive records. Always use privacy-preserving tools or consult professionals directly for confidential matters.

Q: Do enterprise AI plans offer better privacy protection?

Enterprise plans typically include enhanced security features, dedicated instances, and stricter data handling policies. However, they still process data on external servers unless you implement local privacy tools that prevent data transmission entirely.